EEB 603 – Reproducible Science

Chapters

Sven Buerki - Boise State University

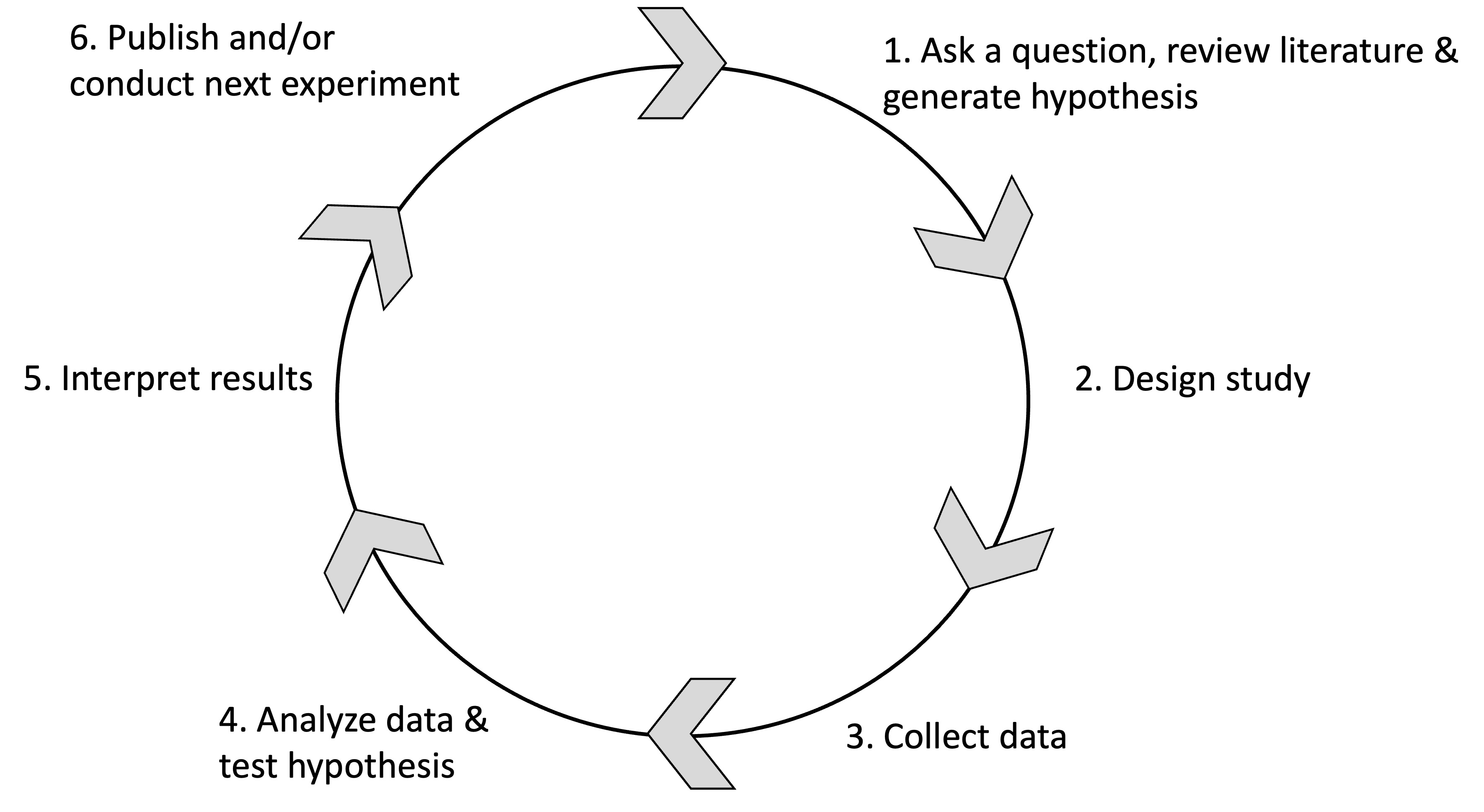

2025-10-14

1 Syllabus

Please see this webpage for more details on the Syllabus.

2 Chapter 1

2.1 Goal

In this chapter, we engage in group activities and literature reading to explore the concept of reproducible science, including its challenges, benefits, and practical implementation in research. Overall, this chapter aims to provide a broader understanding of reproducible science and the context that underpins the material covered throughout the course.

The chapter is subdivided into two parts:

2.2 Learning Outcomes

- Understand the definitions of reproducibility and replicability in scientific research

- Identify the importance of reproducible science for research integrity and advancements

- Recognize the challenges to achieving reproducibility and potential solutions

- Engage with practical tools and strategies to promote reproducible research practices

2.3 Online Resources

This list of online resources supports our group activities:

- Reproducibility and Replicability in Science - A report published by the National Academies of Sciences, Engineering, and Medicine.

- Retraction Watch - Tracking retractions as a window into the scientific process.

- Retraction Watch Database - The database associated with Retraction Watch.

- PubPeer - An online journal club where researchers are posting comments on articles.

- PubMed - This database comprises more than 39 million citations for biomedical literature from MEDLINE, life science journals, and online books. Citations may include links to full text content from PubMed Central and publisher web sites.

- RESCIENCE C - Reproducible Science is good. Replicated Science is better.

- Peer Review Week - Peer Review Week is a community-led yearly global virtual event celebrating the essential role that peer review plays in maintaining research quality. The event brings together individuals, institutions, and organizations committed to sharing the central message that quality peer review in whatever shape or form it may take is critical to scholarly communication.

- Research Integrity and Peer Review - An international, open access, peer reviewed journal that encompasses all aspects of integrity in research publication, including peer review, study reporting, and research and publication ethics.

- Tools such as ChatGPT threaten transparent science; here are our ground rules for their use - A Nature editorial discussing the inclusion of AI in research articles.

2.4 What is Reproducible Science?

To investigate this question, students engage in self-reflection and group activities designed to explore the meaning of reproducible science and its implementation in research and scientific publications.

Disclaimer: The instructor has provided definitions and other supporting materials (in collapsible boxes) throughout this section. However, students are advised not to read these materials in advance, in order to complete the exercises sincerely and derive the greatest benefit from the experience.

2.4.1 Class Outline

The structure of this class, held over two sessions, is as follows:

- Introduction and Definitions

- Case Studies and Challenges

- Best Practices and Open Science Tools

- Wrap-up and Future Directions

The first two sections will be covered during Session 1, while the remaining sections will be addressed in Session 2.

Please ensure that you have identified the reproducibility challenges in your assigned publication (see Case Studies and Challenges) prior to attending the second session.

2.4.2 Introduction and Definitions

2.4.2.1 ✍️ Individual Self-Reflection (5 minutes)

Students reflect on what “reproducible science” means to them and write down a personal definition.

2.4.2.2 🤝 Group Sharing and Discussion (15 minutes)

- Form small groups (3–4 students)

- Small groups share and compare their definitions

- Each group presents insights to the class to foster open discussion

2.4.2.3 📘 Formal Definitions (10 minutes)

Standard definitions are introduced to clarify the distinction between reproducibility and replicability.

Students are invited to relate them to their own research experience.

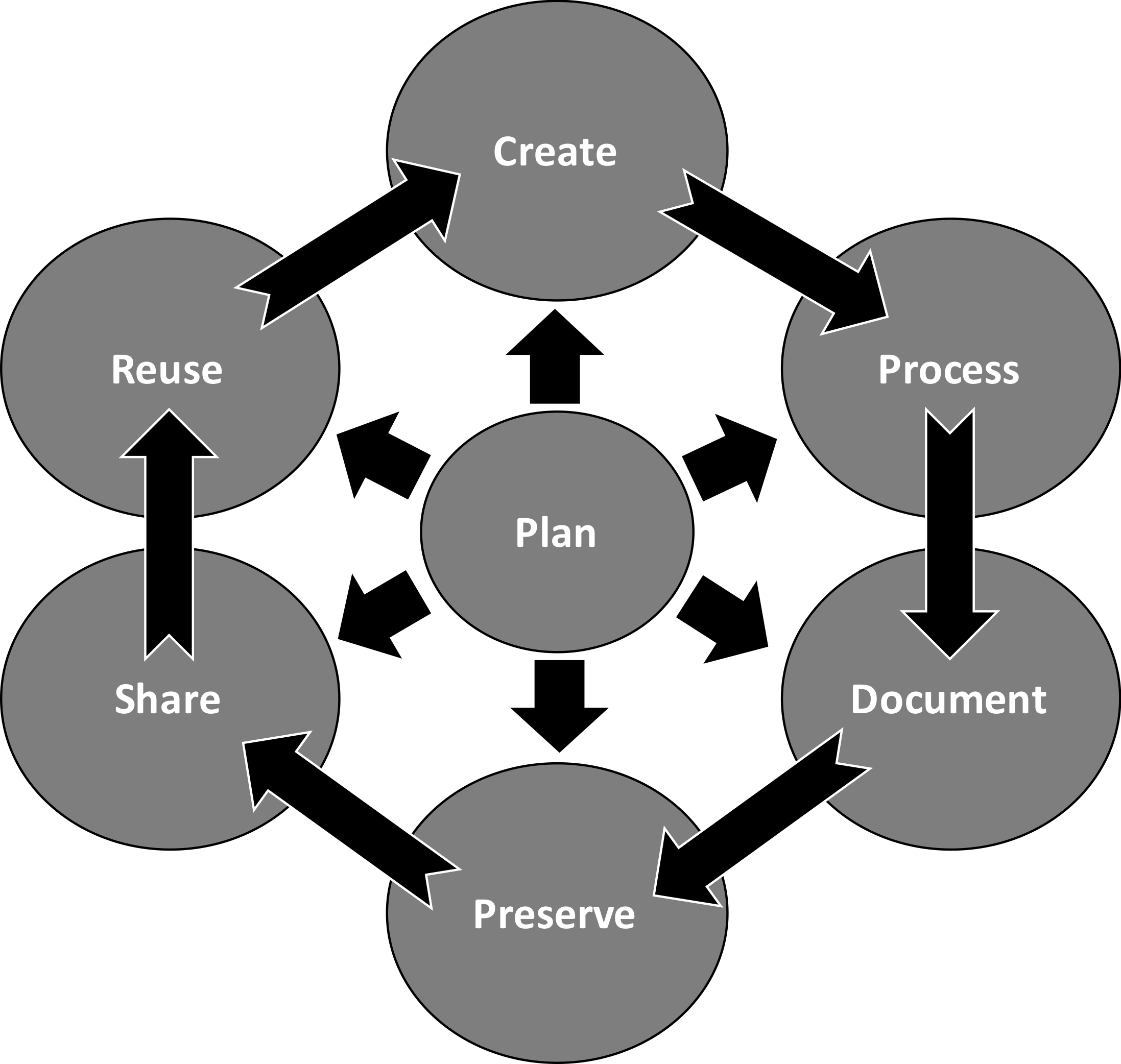

📌 What is Reproducible Science?

Reproducible science means achieving the same findings using the original data, methods, and code.

Key elements:

- Transparency – Open access to data, code, and methods.

- Documentation – Clear steps and research design explanation.

- Data Availability – Raw data is publicly available.

- Code Availability – Scripts are shared and well-commented.

- Standardized Practices – Use of trusted tools and workflows.

🔄 Reproduction vs. Replication

Reproduction = Running the same code on the same data to verify results (like software testing for an entire study).

Replication = Repeating the study with intentional variations (e.g., different species, software, or parameters) to test if conclusions hold.

- Reproduction confirms that the research is traceable and correctly recorded.

- Replication tests what aspects of a study matter for the results to hold.

Key insight: Replication is most useful after reproduction is verified.

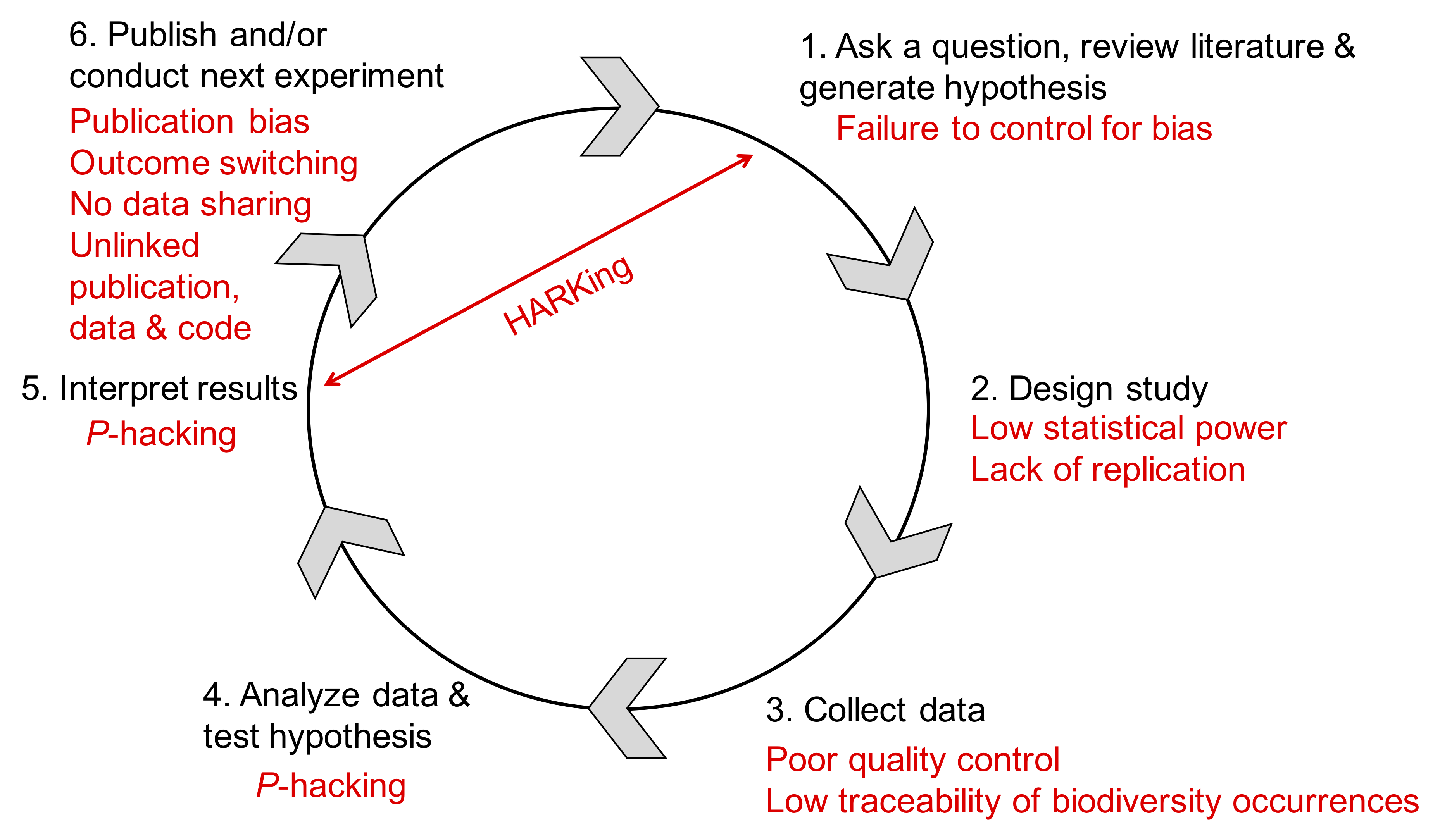

2.4.3 Case Studies and Challenges

2.4.3.1 👥 Group Work Activity (50 minutes)

Each group is given a real-world publication that illustrates challenges to reproducibility, such as:

- 🔒 Lack of data/code sharing

Data and code are not made available, preventing verification and reuse. - 🧩 Incomplete methodological detail

Missing or vague descriptions of protocols, tools, or parameters hinder reproducibility. - 📉 Poor statistical practices

Misuse or misunderstanding of statistical tests can lead to invalid conclusions. - 🎯 Publication bias

Preference for positive or novel results skews the scientific record and limits replication efforts. - 🤖 Unclear or unvalidated use of AI tools

Use of AI (e.g., large language models, predictive algorithms) without documenting models, prompts, parameters, or validation steps reduces transparency and reproducibility. - 🧪 Data manipulation or selective reporting

Deliberate or unintentional exclusion, alteration, or cherry-picking of data that misrepresents findings.

The instructor will hand out copies of the publications, but they are also available on our Google Drive.

📝 Task Instructions:

Each group:

- Read their assigned publication

- Identify specific reproducibility challenges

- Discuss how these challenges might impact the broader research community

- Brainstorm strategies or solutions to mitigate these issues and improve reproducibility

💡 Note: Students are encouraged to explore their assigned study online and consult the provided resources.

However, please form your own conclusions before researching externally.

2.4.3.2 📢 Group Presentations & Class Discussion (20 minutes)

- Each group presents:

- The reproducibility challenges in their case study

- Proposed solutions or best practices to address them

- 🧭 Facilitated Discussion Tip:

Guide the discussion by drawing links across different case studies — uncover systemic challenges and the interconnected factors that shape reproducibility across disciplines.

2.4.3.3 Reasons Behind the Retractions of Case Studies (10 minutes)

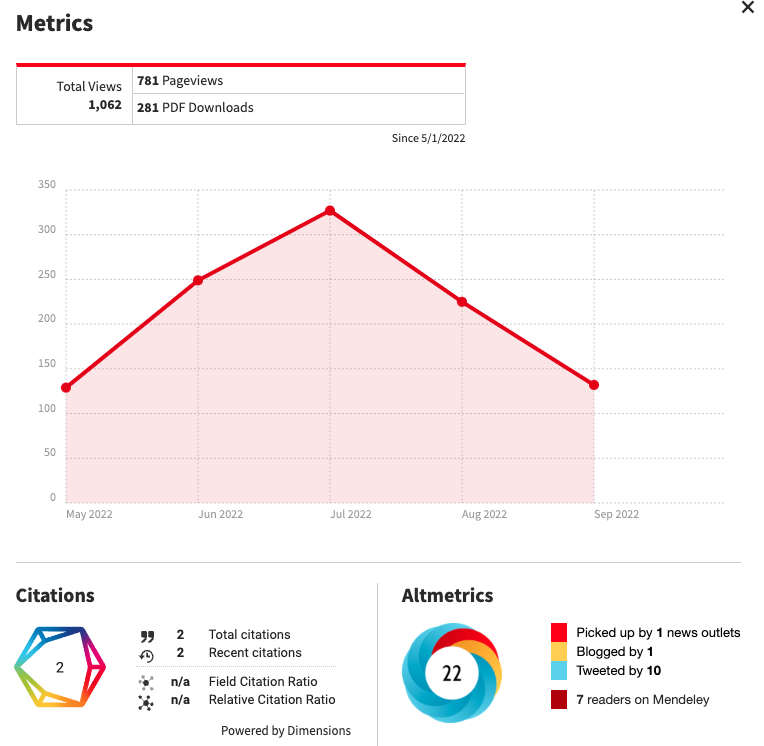

The data supporting the retractions of the case studies are presented in Table 2.1 (sourced from Retraction Watch). Digital Object Identifiers (DOI) and PubMed IDs are provided for each case study.

Note that the article assigned to Group 3 has not been formally retracted; however, several experts have raised concerns on PubPeer. These concerns relate to: (i) the use of machine-generated passages, (ii) the inclusion of references that do not appear to exist, and (iii) references to figures in the article that are themselves nonexistent.

| GroupID | Title | Journal | ArticleType | RetractionDOI | RetractionPubMedID | OriginalPaperDOI | OriginalPaperPubMedID | Reason |

|---|---|---|---|---|---|---|---|---|

| 1 | Primary Prevention of Cardiovascular Disease with a Mediterranean Diet | NEJM: The New England Journal of Medicine | Research Article; | 10.1056/NEJMc1806491 | 29897867 | 10.1056/NEJMoa1200303 | 23432189 | +Error in Analyses;+Error in Methods;+Error in Results and/or Conclusions;+Retract and Replace; |

| 2 | Hydroxychloroquine and azithromycin as a treatment of COVID-19: results of an open-label non-randomized clinical trial | International Journal of Antimicrobial Agents | Clinical Study; | 10.1016/j.ijantimicag.2024.107416 | 39730229 | 10.1016/j.ijantimicag.2020.105949 | 32205204 | +Concerns/Issues About Authorship/Affiliation;+Concerns/Issues About Data;+Concerns/Issues about Results and/or Conclusions;+Concerns/Issues about Article;+Concerns/Issues about Human Subject Welfare;+Date of Article and/or Notice Unknown;+Informed/Patient Consent - None/Withdrawn;+Investigation by Journal/Publisher;+Investigation by Third Party; |

| 4 | Correlation of Carotid Artery Intima-Media Thickness with Calcium and Phosphorus Metabolism, Parathyroid Hormone, Microinflammatory State, and Cardiovascular Disease | BioMed Research International | Research Article; | 10.1155/2024/9893064 | 38550095 | 10.1155/2022/2786147 | 35313627 | +Computer-Aided Content or Computer-Generated Content;+Concerns/Issues About Data;+Concerns/Issues about Referencing/Attributions;+Concerns/Issues about Results and/or Conclusions;+Concerns/Issues with Peer Review;+Investigation by Journal/Publisher;+Investigation by Third Party;+Paper Mill;+Unreliable Results and/or Conclusions; |

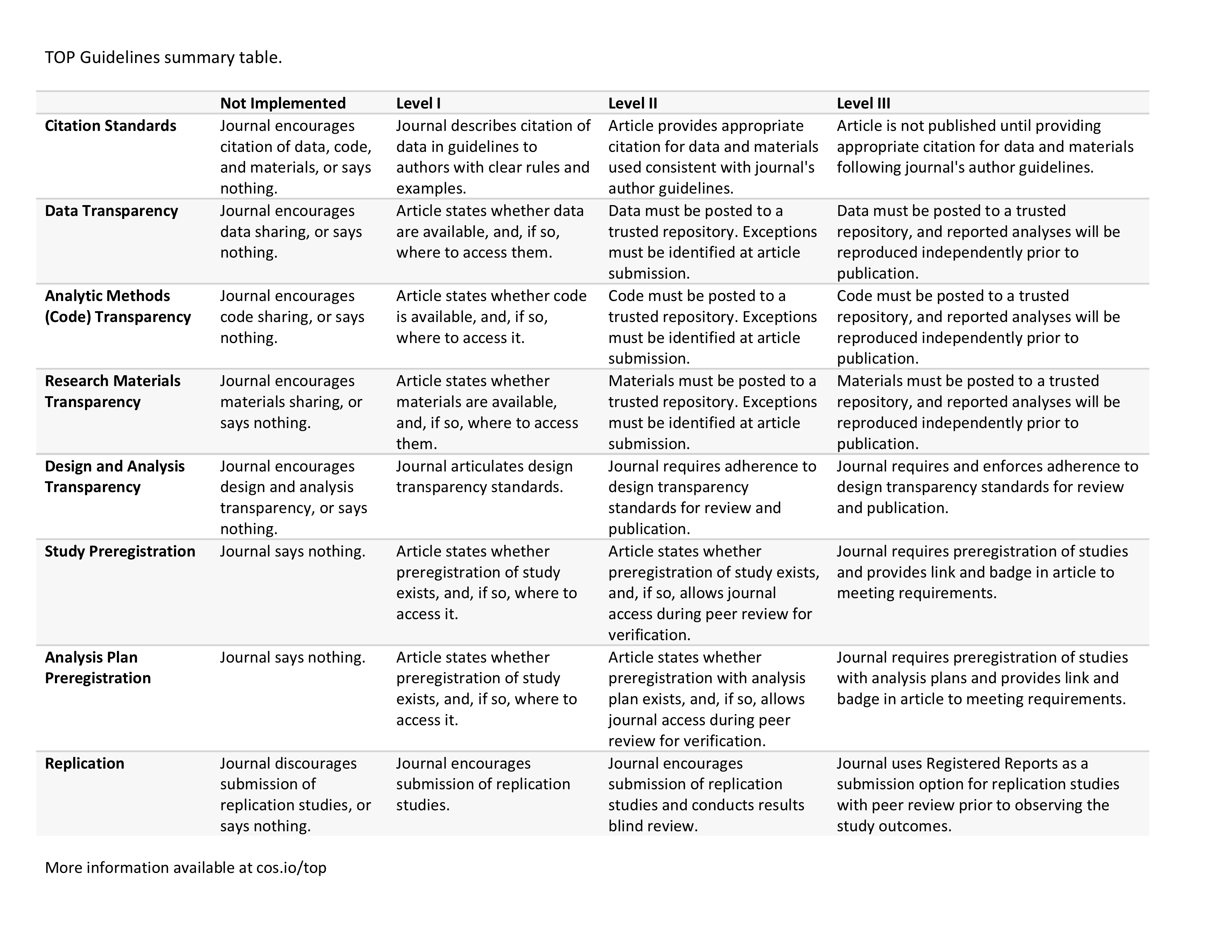

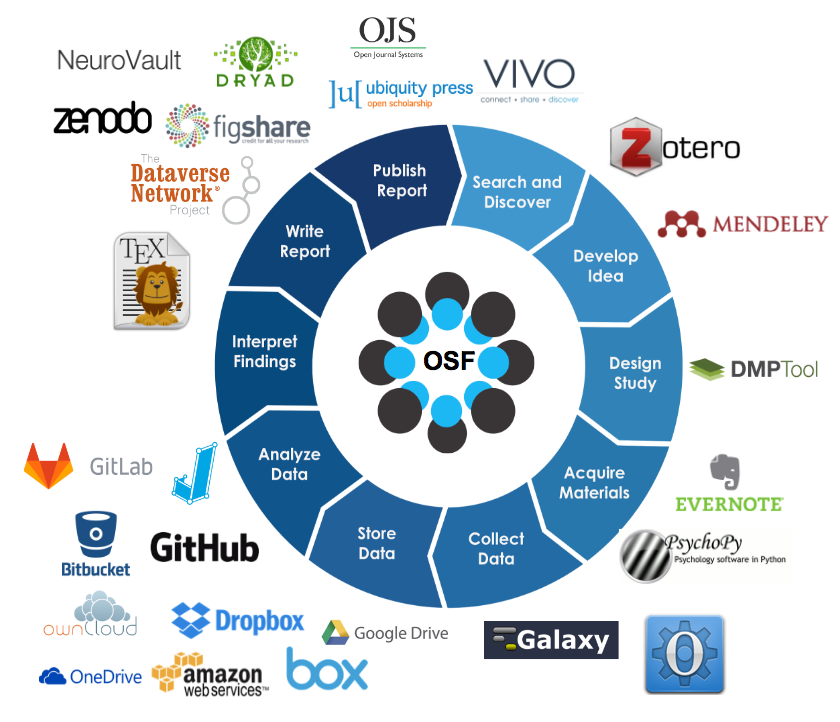

2.4.4 Best Practices and Open Science Tools

2.4.4.1 🧠 Group Brainstorm (20 minutes)

In small groups, students:

- Identify best practices and open science tools that enhance research reproducibility

- Draw from earlier discussions and real-world case studies

Afterward, we will share and compare our recommendations as a class.

2.4.4.2 🚀 Key Strategies & Tools (10 minutes)

The instructor goes over the points in this collapsible box to further discuss implementing reproducible science in your research. Please don’t look at the content of the box before we get here!

✅ Strategies and tools for reproducible science

- Detailed Protocols: Importance of clear and precise documentation of all steps leading to data production.

- Lab Notebooks: For improved documentation and data management.

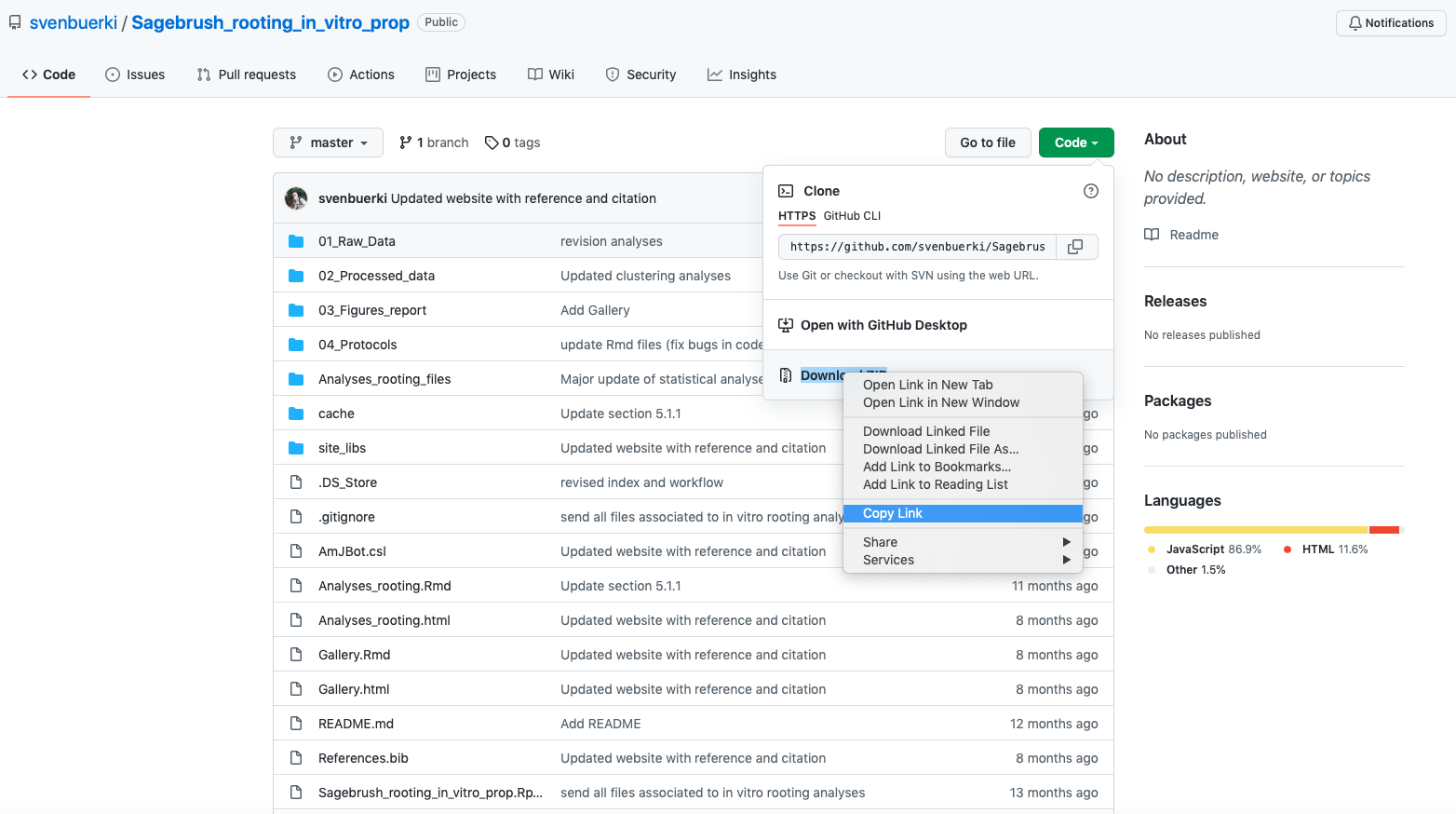

- Version Control: Using tools like Git for code and data management.

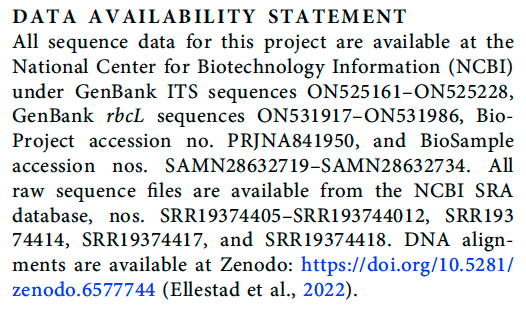

- Data and Code Repositories: Platforms like Dryad, Zenodo, and Figshare for sharing research materials.

2.5 What Factors Break Reproducibility?

2.5.1 Overview

In Part B, we are further investigating the causes leading to irreproducible science and discussing ways to mitigate this crisis. For this purpose, we are using results from the survey published by Baker (2016) and recommendations from the report published by the National Academies of Sciences, Engineering, and Medicine (National Academies of Sciences and Medicine, 2019).

2.5.2 Investigated Questions

We will be investigating the following questions:

- Is there a reproducibility crisis?

- How much published work in your field is reproducible?

- What is the scale of the reproducibility crisis?

- What corrective measures can be implemented?

- What factors contribute to irreproducible research?

- What can be done to mitigate the reproducible crisis?

2.5.3 Resources

The PDFs of Baker (2016) and National Academies of Sciences and Medicine (2019) are available in our shared Google Drive at the following paths:

Reproducible_Science > Publications > Baker_Nature_2016.pdf

Reproducible_Science > Publications > Reproducibility_and_Replicability_in_Science_2019.pdf

2.5.4 Teaching Material

The presentation associated with this class is available here:

2.5.5 Recommendations

The most relevant recommendations proposed by the National Academies of Sciences and Medicine (2019) for our course are listed in the table below.

3 Chapter 2

3.1 Introduction

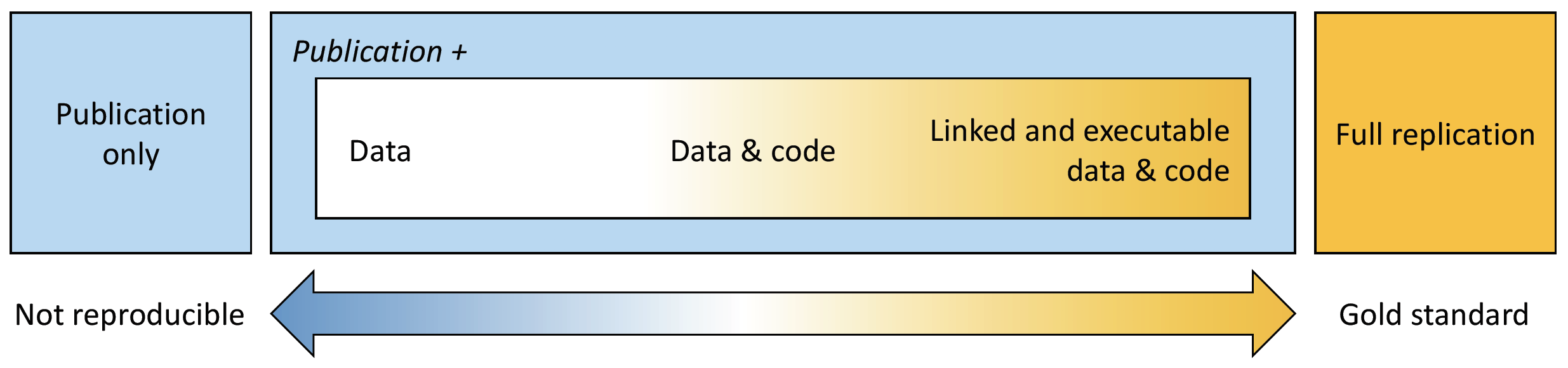

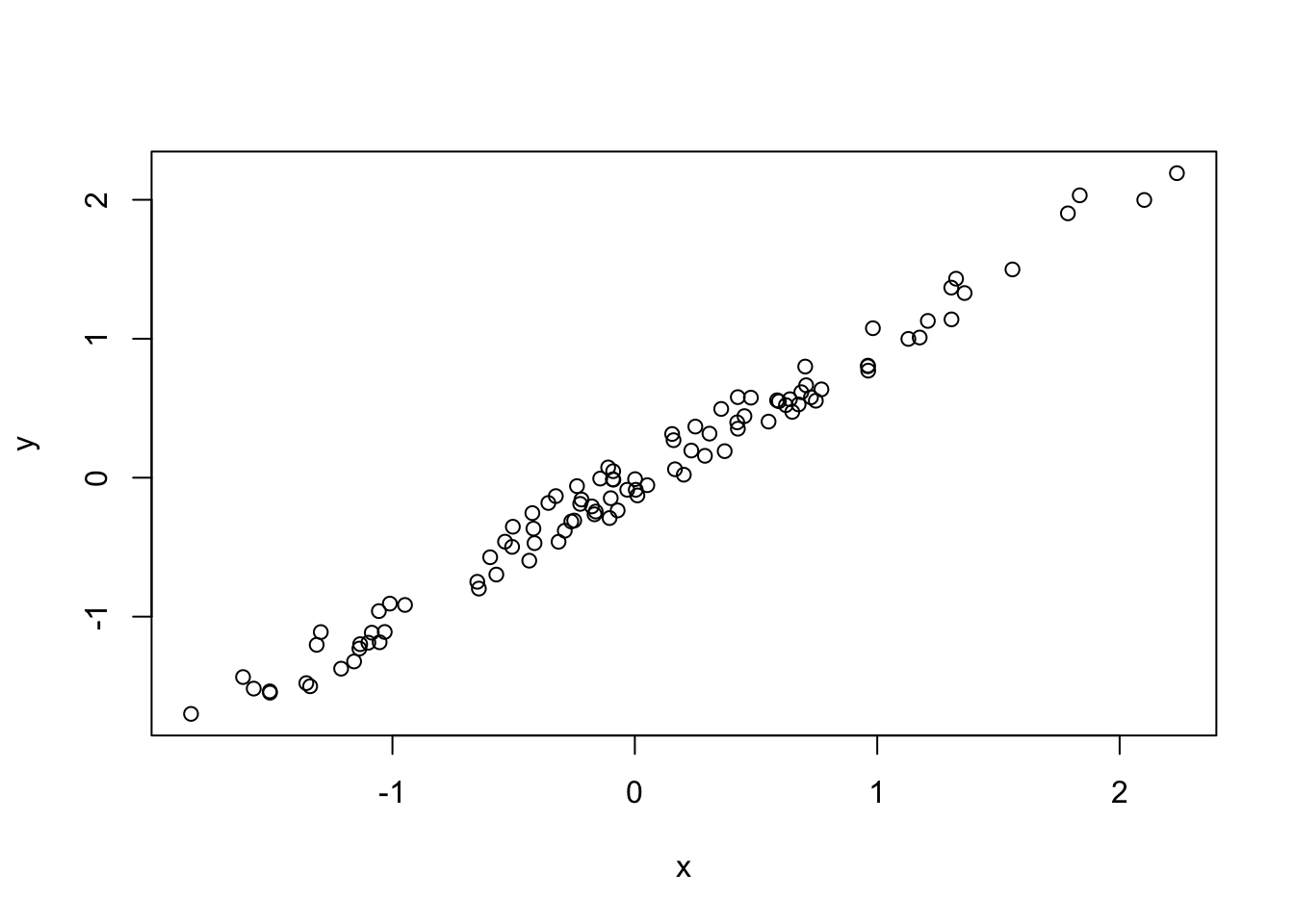

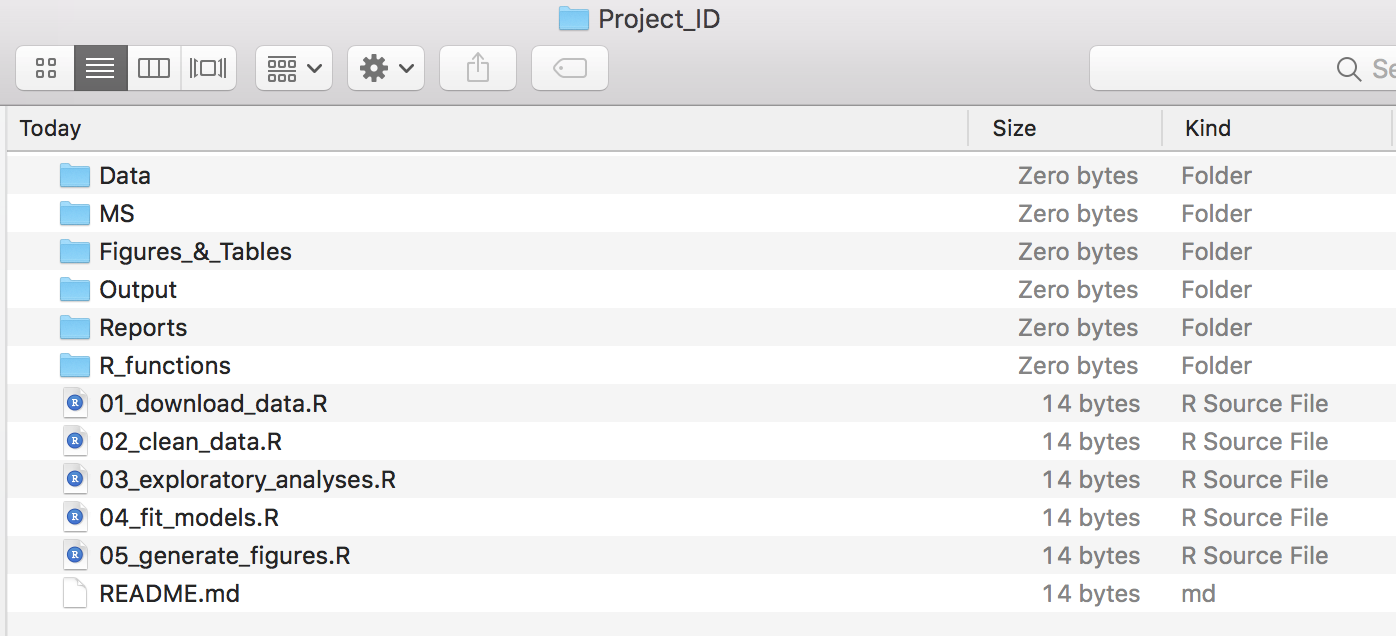

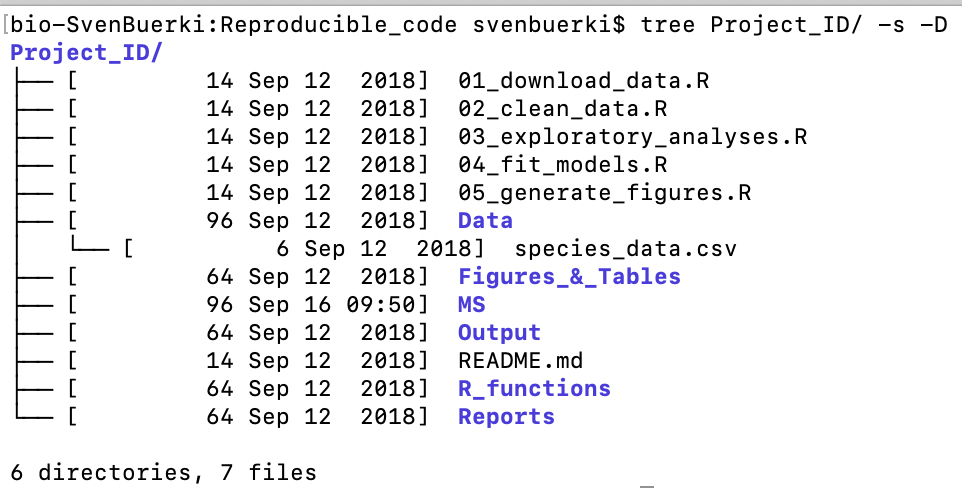

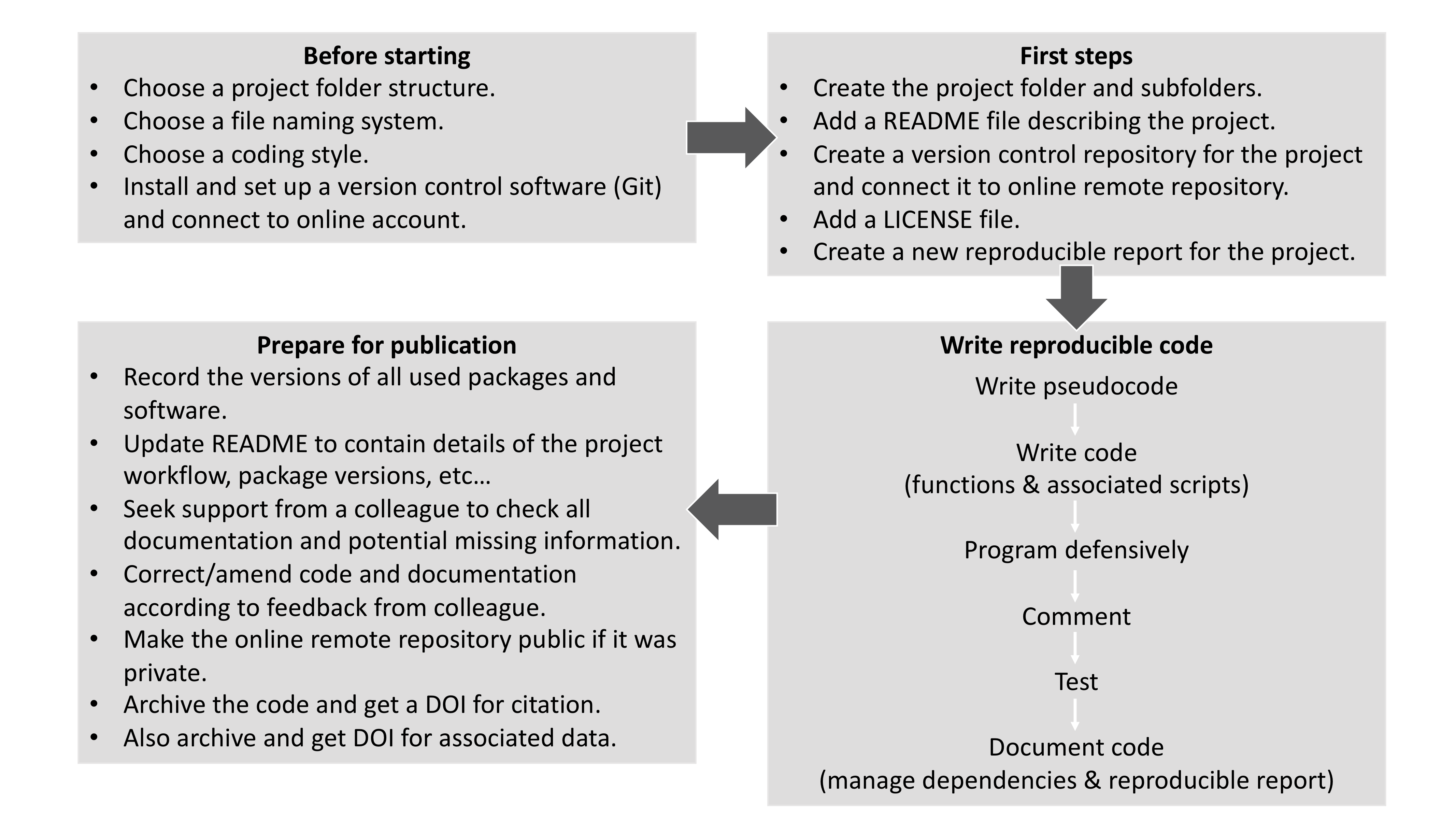

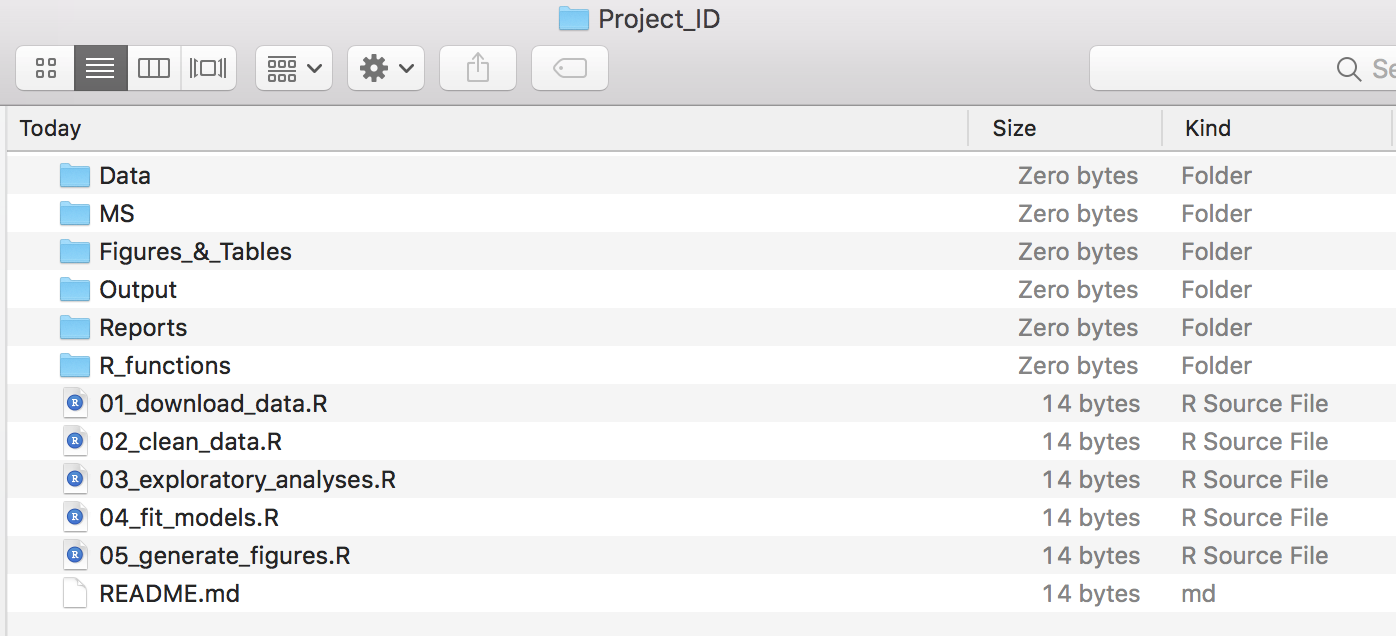

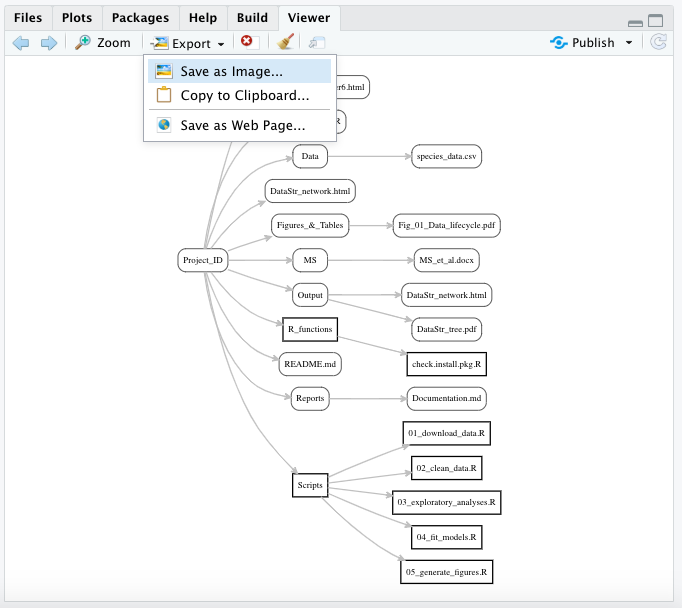

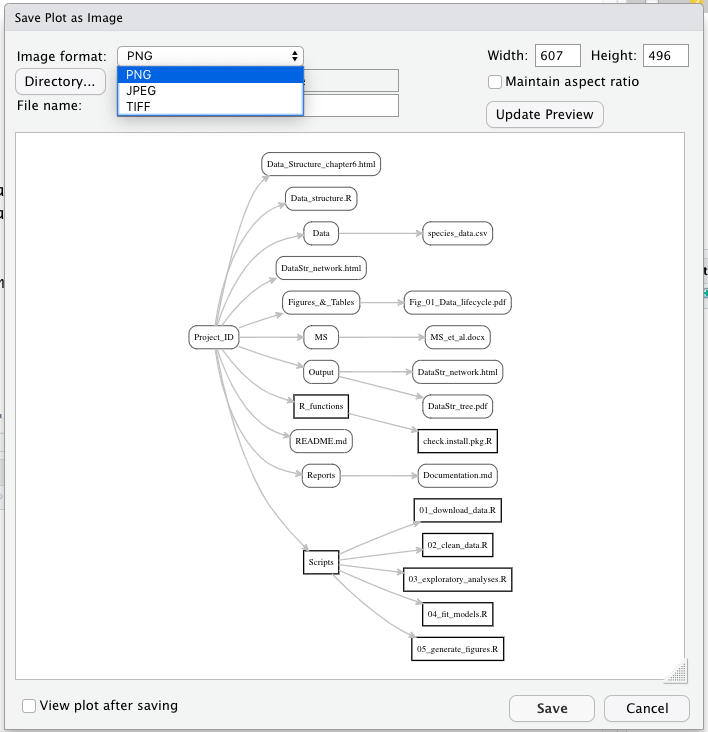

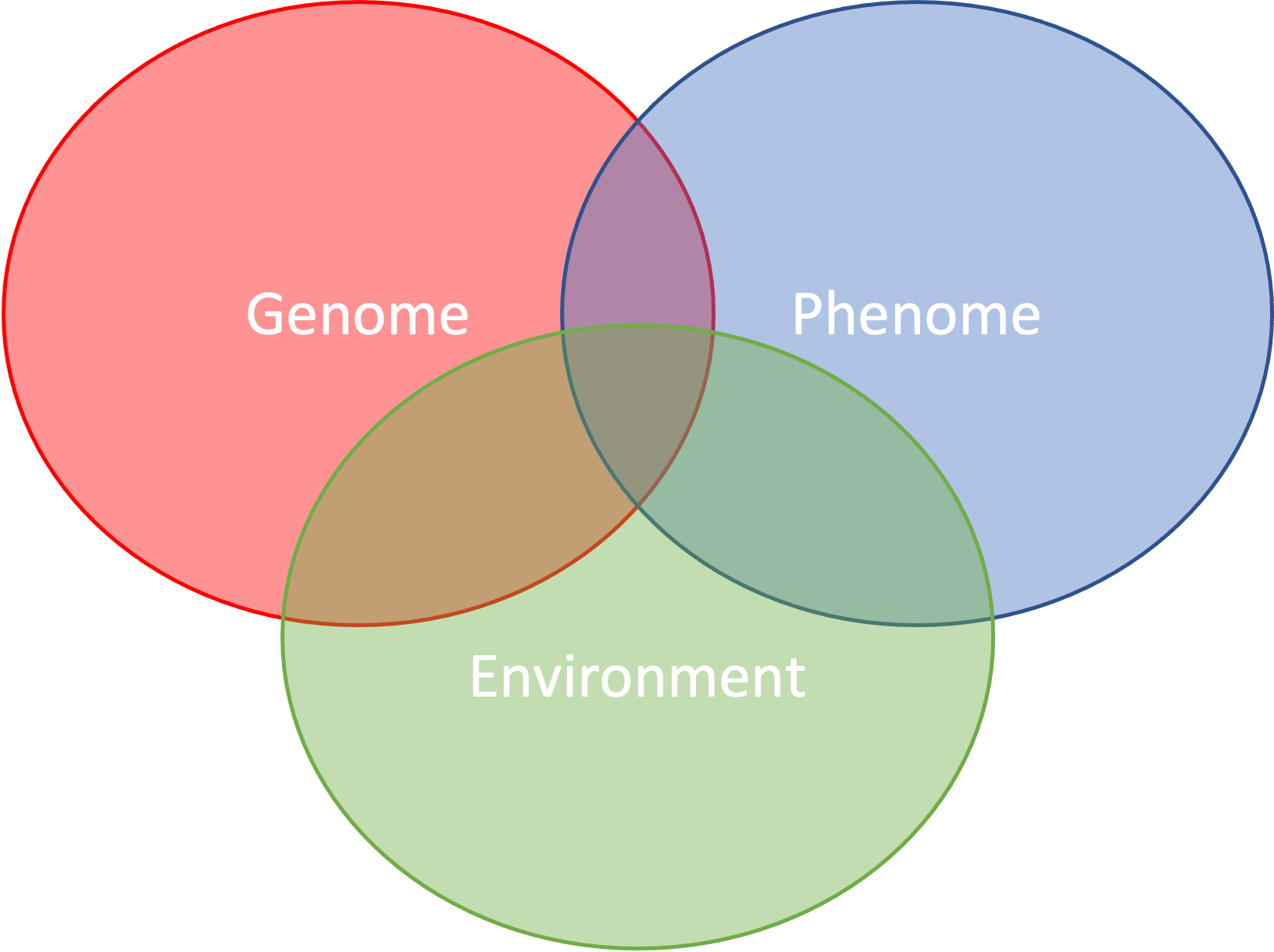

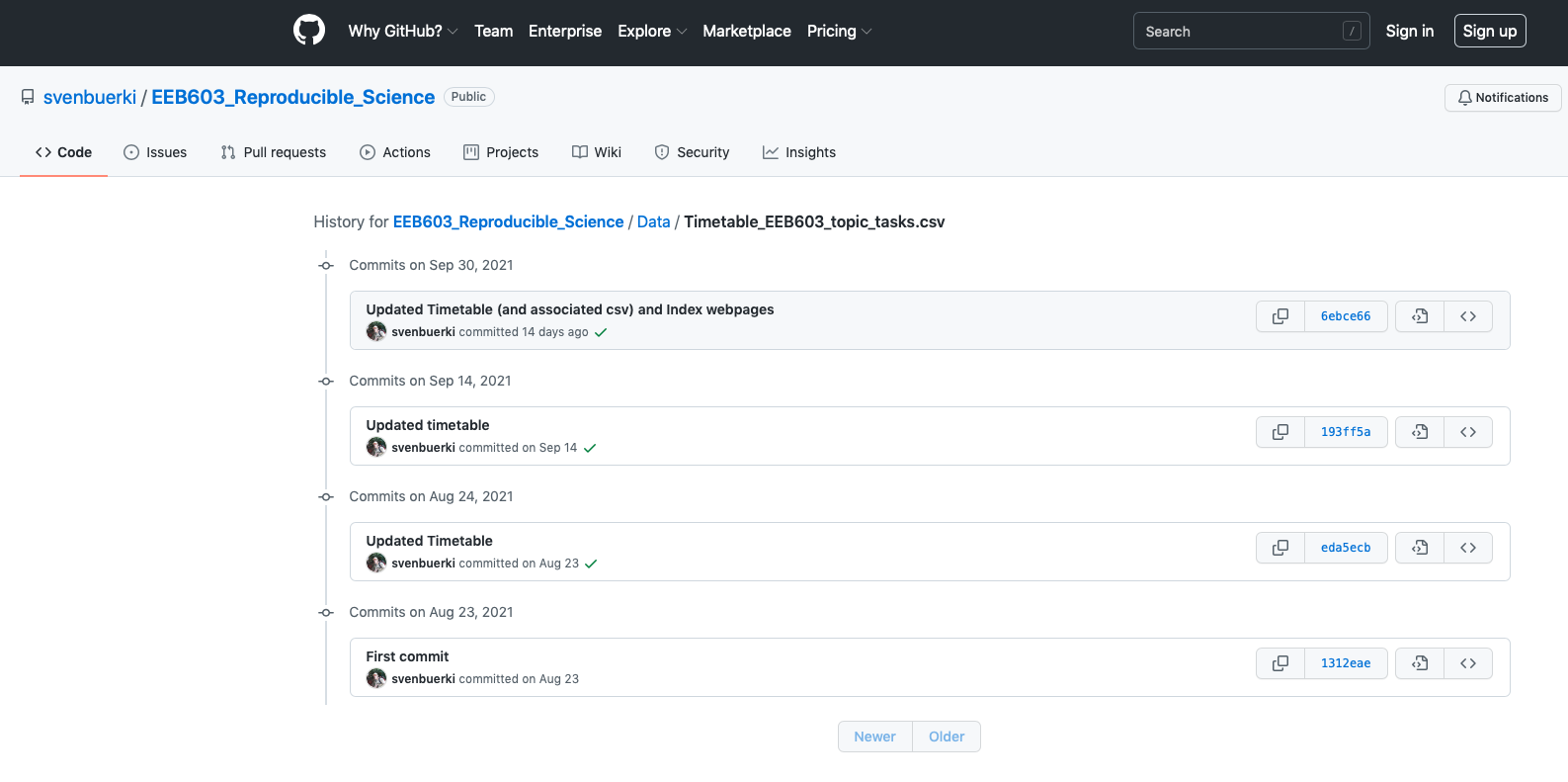

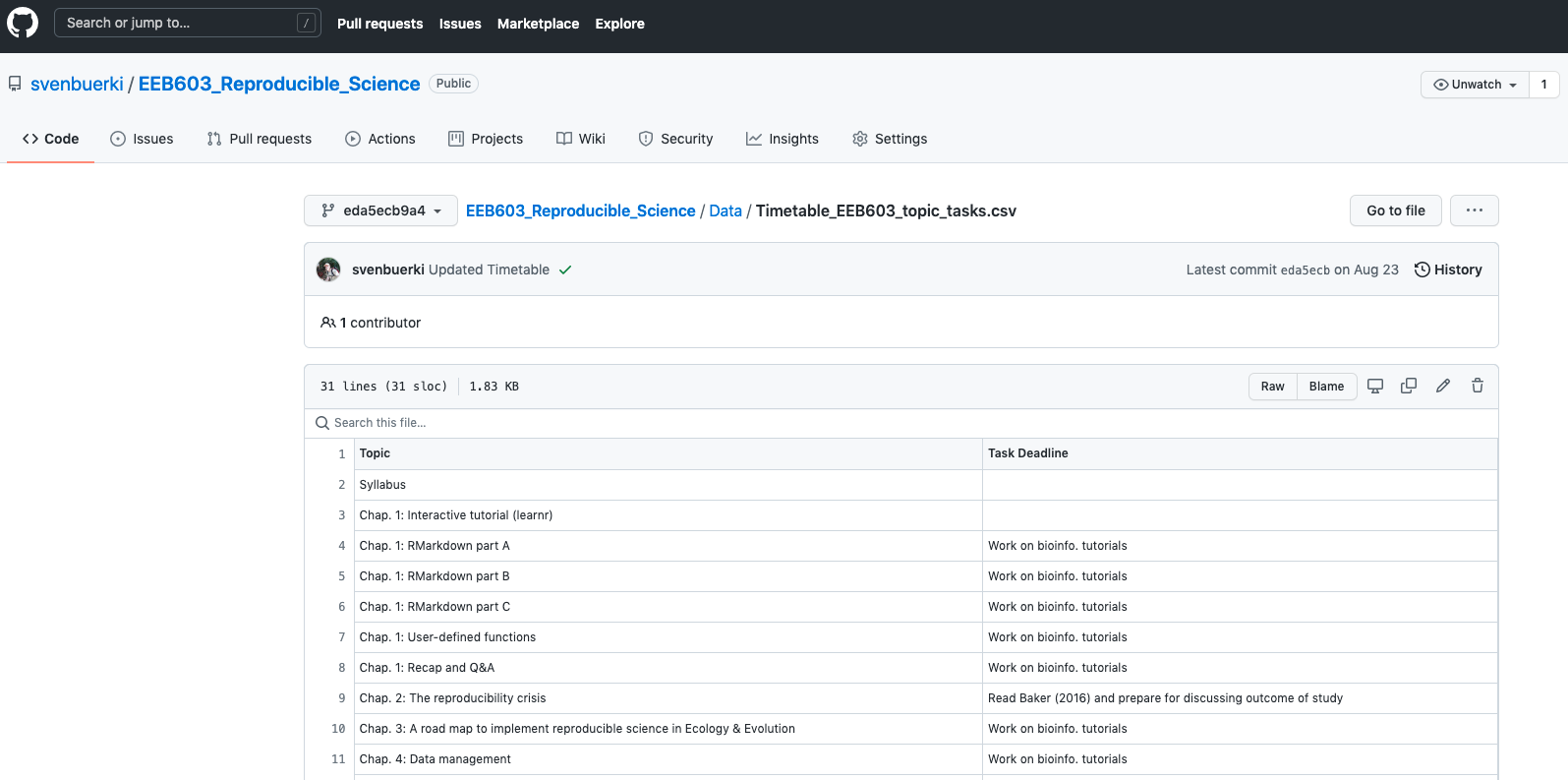

In this chapter, we introduce the use of bioinformatics tools for writing and disseminating reproducible reports, as implemented in RStudio (RStudio Team, 2020). More specifically, we will learn how to link and execute data and code within a unified environment (see Figure 3.1).

3.1.1 Aim

This chapter aims to equip students with the essential skills required to effectively use R Markdown for integrating text, code, figures, tables, and references into a cohesive, reproducible document. The final output can be rendered into multiple formats—including PDF, HTML, or Word—to streamline the communication and sharing of research findings.

This tutorial provides students with the foundational knowledge necessary to complete their bioinformatics tutorials (PART 2) and individual projects (PART 3).

3.1.2 Objectives

The chapter is divided into six parts, each with specific learning objectives:

- PART A: Learning the Basics

- PART B: Setting Your R Markdown Document

- PART C: Tables, Figures, and References

- PART D: Advanced R Markdown Settings

- PART E: User-Defined Functions in R

- PART F: Interactive Tutorials

Figure 3.1: The spectrum of reproducibility.

3.1.3 Supporting Files

Files supporting this chapter are available on Google Drive.

3.1.4 Install R Markdown Software

Software and packages required to perform this tutorial are detailed below. Students should install those software and packages on their personal computers to be able to complete this course. Additional packages might need to be installed and the instructor will provide guidance on how to install those as part of the forthcoming tutorials.

- R: https://www.r-project.org

- R packages:

bookdown,knitrandR Markdown. Use the following R command to install those packages:

install.packages(c("bookdown", "knitr", "rmarkdown"))- RStudio: https://www.rstudio.com/products/rstudio/download/

- TeX: This software is required to compile document into

pdfformat. Please install MiKTeX on Windows, MacTeX on OS X and TeXLive on Linux. For this class, you are not requested to install this software, which takes significant hard drive space and is harder to operate on Windows OS.

NOTE: The instructor is using the following version of RStudio: Version 2025.05.0+496 (2025.05.0+496). If your computer is experiencing issues running the latest version of the software, you can install previous versions here.

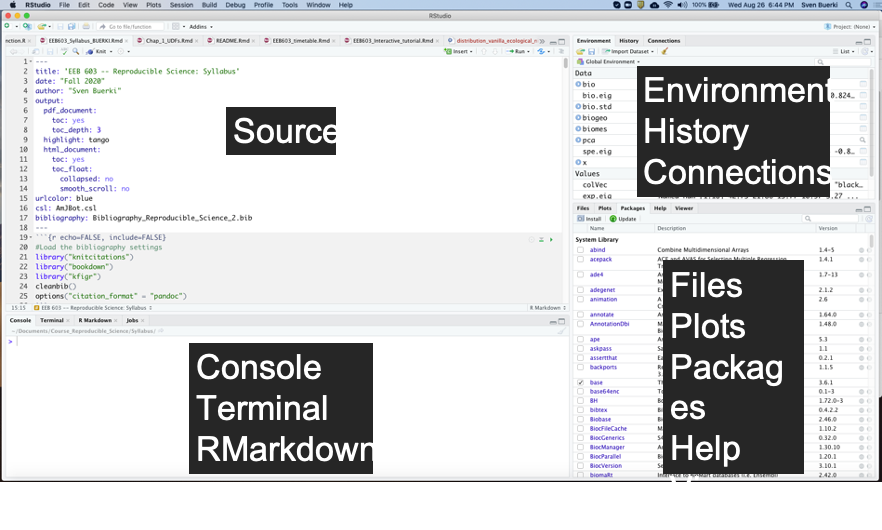

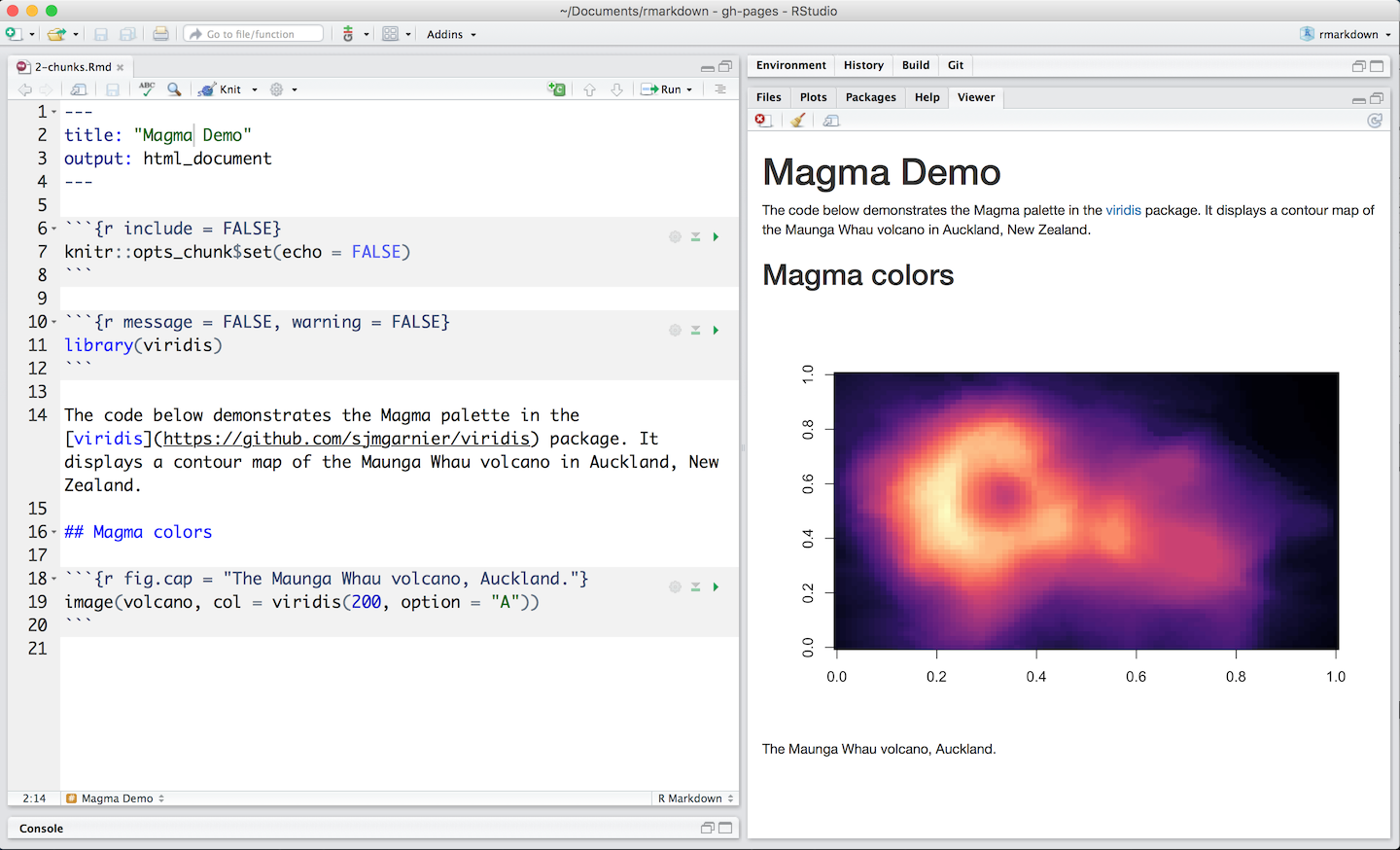

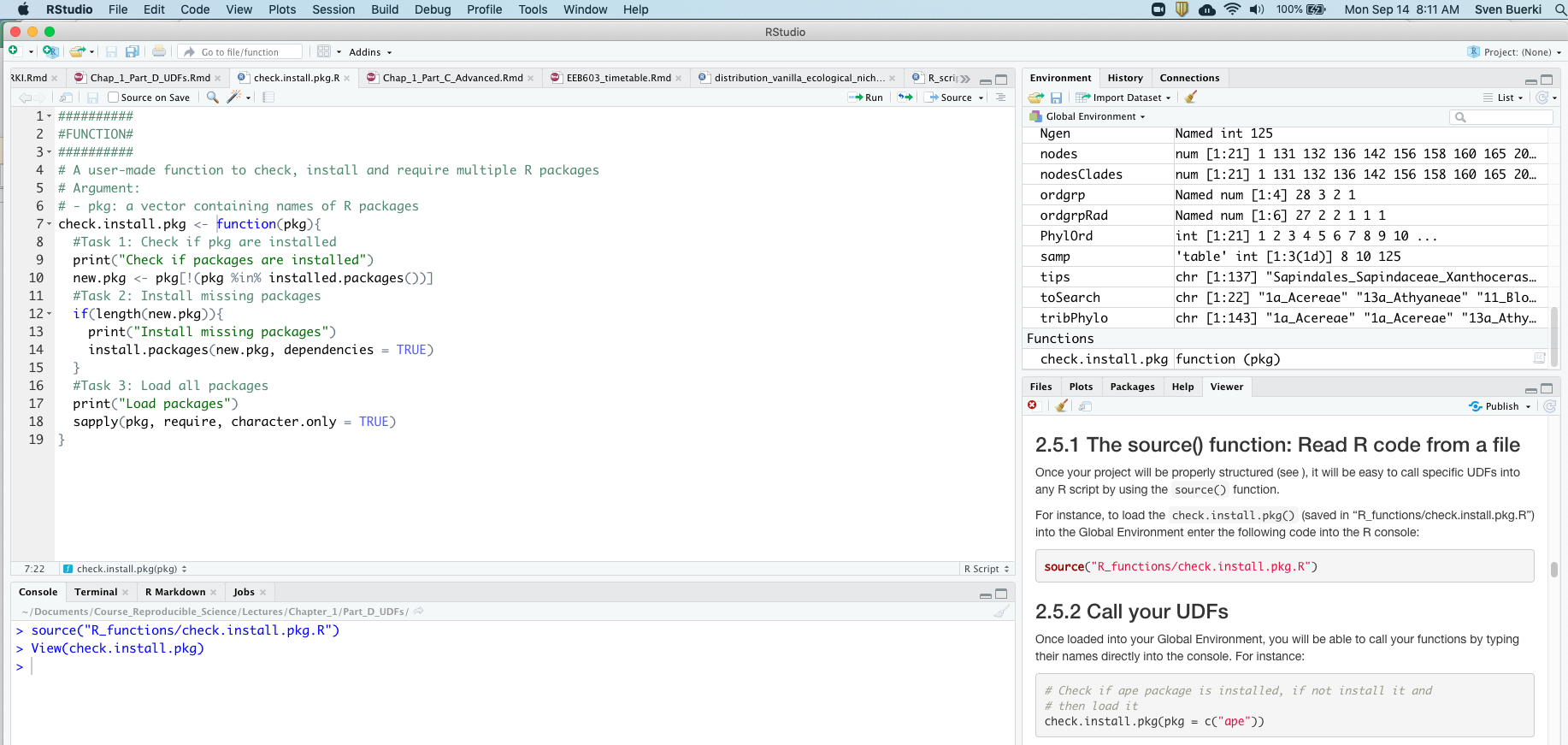

3.1.5 What is RStudio?

RStudio (RStudio Team, 2020) is an integrated development environment (IDE) that allows you to interact with R more efficiently. While similar to the standard R GUI, RStudio is significantly more user-friendly. It offers more drop-down menus, tabbed windows, and extensive customization options (see Figure 3.2).

Detailed information on using RStudio can be found on RStudio’s website.

Figure 3.2: Snapshot of the RStudio environment showing the four windows and their content.

3.1.6 Web Resources

Below are URLs to web resources that provide key information related to Chapter 2:

- R Markdown: https://rmarkdown.rstudio.com

- R Markdown: The Definitive Guide (by Yihui Xie, J. J. Allaire, and Garrett Grolemund): https://bookdown.org/yihui/rmarkdown/

- Write HTML, PDF, ePub, and Kindle books with R Markdown: https://bookdown.org

- bookdown: Authoring Books and Technical Documents with R Markdown (by Yihui Xie): https://bookdown.org/yihui/bookdown/

- knitr: http://yihui.name/knitr/

- Pandoc: A Universal Document Converter: https://pandoc.org

- Bibliographies and Citations in R Markdown: https://rmarkdown.rstudio.com/authoring_bibliographies_and_citations.html

- Tutorial on knitr with R Markdown (by Karl Broman): http://kbroman.org/knitr_knutshell/pages/RMarkdown.html

3.2 PART A: Learning the Basics

In this part, we will provide a survey of the procedures to create and render (or knitting) your first R Markdown document.

3.2.1 Learning Outcomes

This tutorial provides students with the opportunity to learn how to:

- Create and render (or “knit”) your first R Markdown document

- Understand basic Markdown syntax and conventions, including:

3.2.2 Introduction to R Markdown

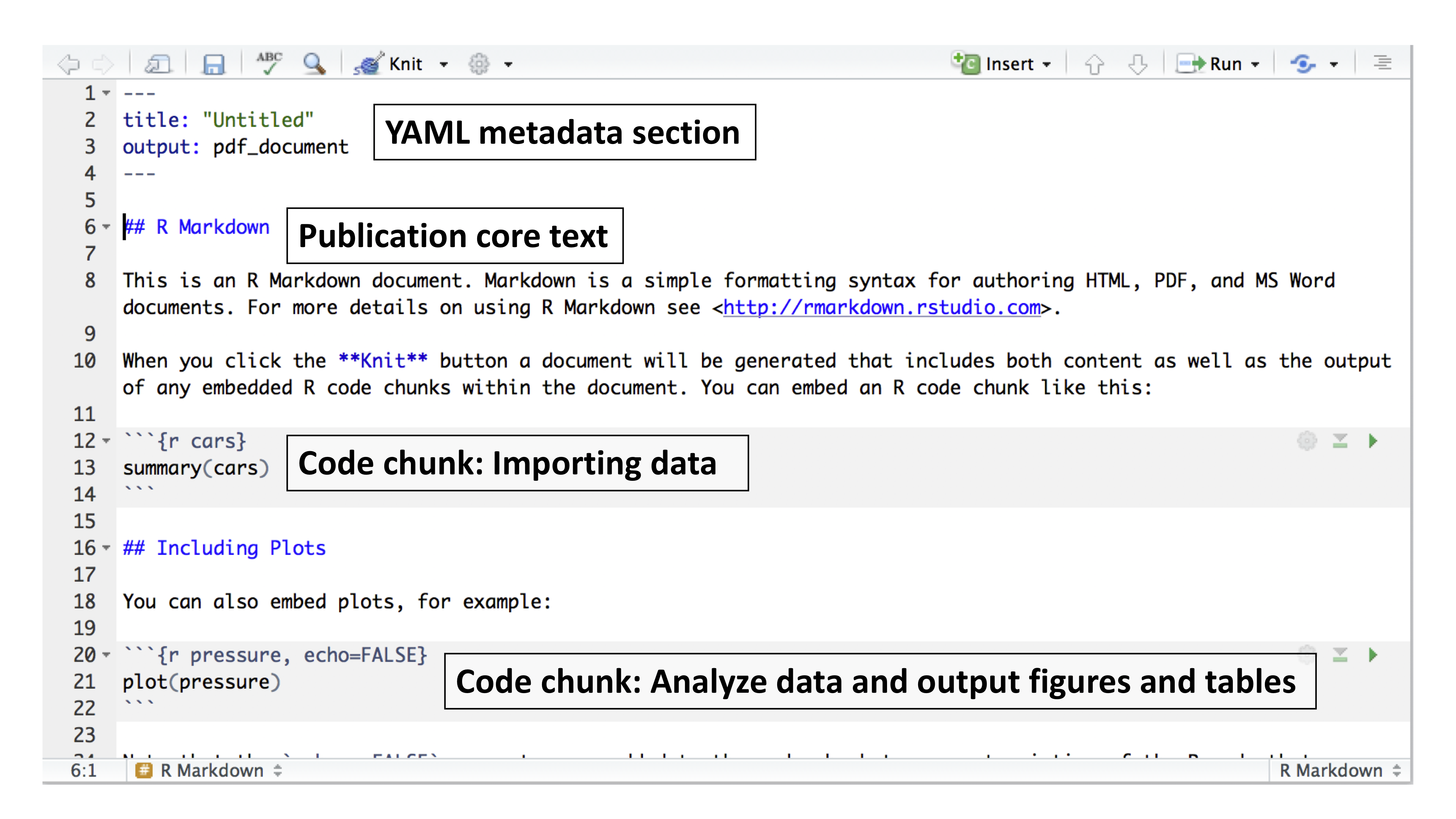

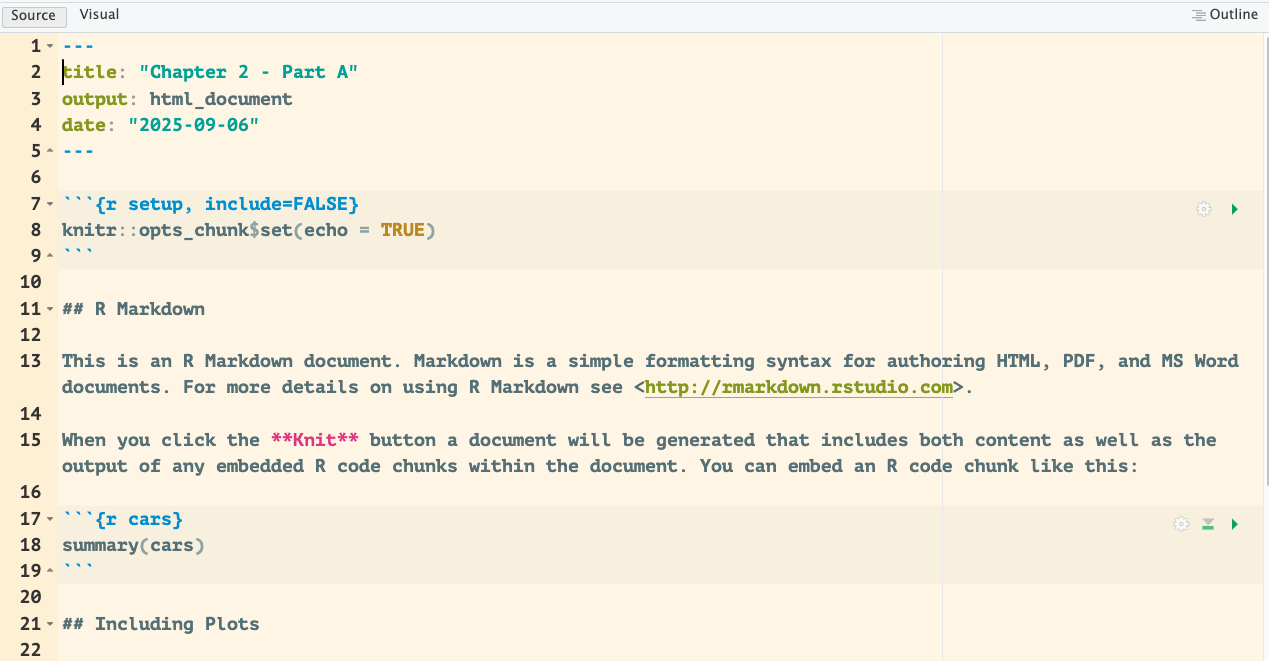

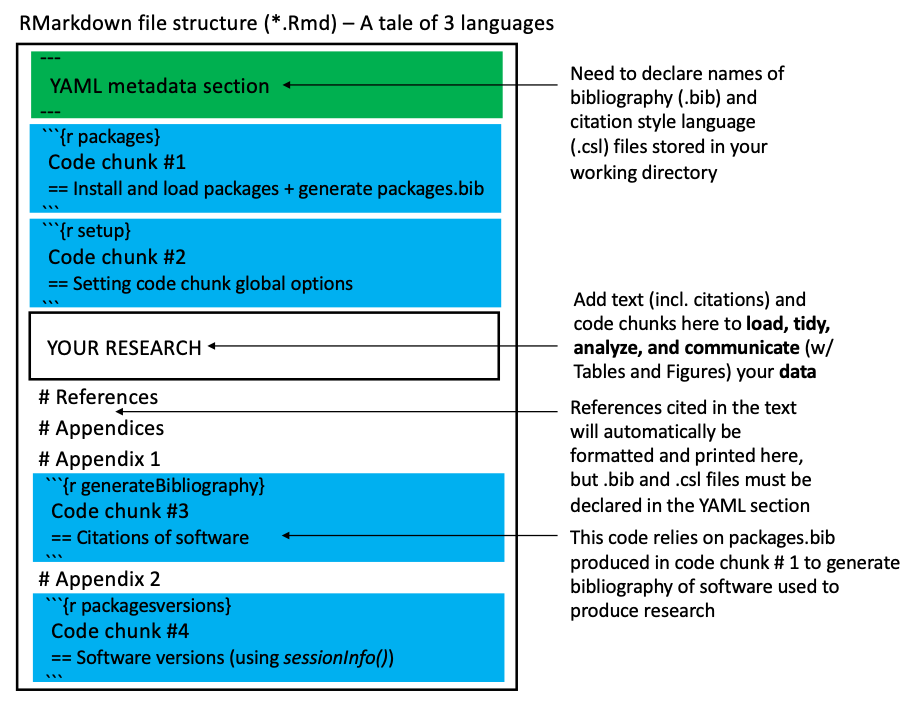

Markdown is a simple formatting syntax used for authoring HTML, PDF, and Microsoft Word documents. It is implemented in the rmarkdown package. An R Markdown document is typically divided into three sections (see Figure 3.3):

- YAML metadata section: This section provides high-level information about the output format of the R Markdown file. The information defined here is used by the Pandoc program to format the final document (see Figure 3.3).

- Main body text: This section represents the core content of your document or publication and uses Markdown syntax.

- Code chunks: This section allows you to import and analyze data, and to produce figures and tables that are directly embedded in the output document.

Figure 3.3: Example of an R Markdown file showing the three major sections.

3.2.3 Your First R Markdown Document

In this section, you will learn how to create and knit your first R Markdown document.

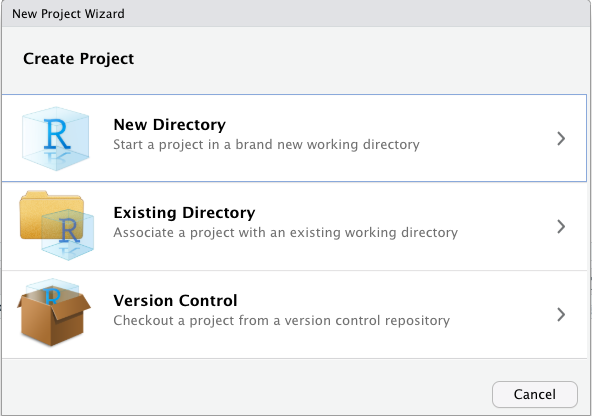

3.2.3.1 Create Document

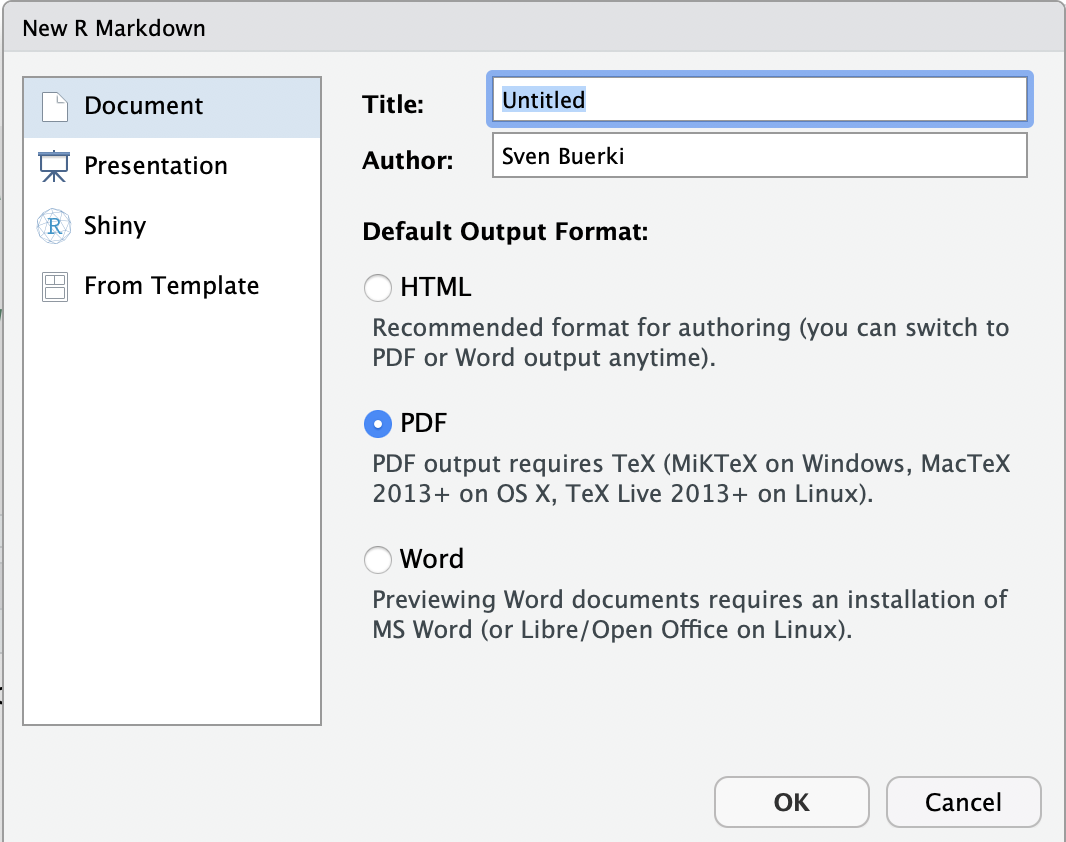

To create an R Markdown document, follow these steps in RStudio:

- Navigate to:

File -> New File -> R Markdown...

- Enter a title for your document and set the output format to

HTML(see Figure 3.4)- Title:

Chapter 2 - Part A(This is the title of your document, not the name of your file; see below) - Note: We will knit documents to the

HTMLformat, which is the default output in RStudio. If you prefer to generate aPDFdocument, you must have a version of theTeXprogram installed on your computer (see Figure 3.4).

- Title:

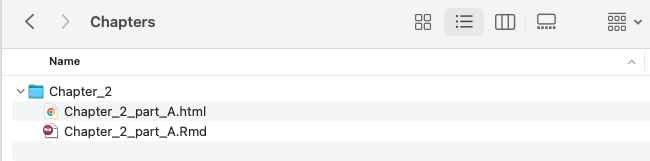

- Save the

.Rmdfile by selecting:File -> Save As...- File name:

Chapter_2_part_A.Rmd

- Project path:

Reproducible_Science/Chapters/Chapter_2

- Warning: Because the knitting process generates multiple files, you should save your

.Rmdfile in a dedicated project folder.

- File name:

Figure 3.4: Snapshot of window to create an R Markdown file.

3.2.3.2 Knit Document

To knit  or render your R Markdown document into an HTML file, follow these steps in RStudio:

or render your R Markdown document into an HTML file, follow these steps in RStudio:

- Click the

Knitbutton (Figure 3.3) in the top toolbar to render the document.

- There are several output format options; however, if you simply click the button, RStudio will automatically knit the document using the settings specified in the YAML metadata section (Figure 3.3).

- The output file will be created in the same directory as your

.Rmdfile (Figure 3.5). You can monitor the progress in the R Markdown console.

💡 Info If the knitting process fails, error messages will appear in the Render console, often indicating the line in the script where the error occurred (although this is not always the case). These messages are helpful for debugging your R Markdown document.

Figure 3.5: Snapshot of your project folder with the knitted html document.

3.2.3.3 How Does the Knitting Process Work?

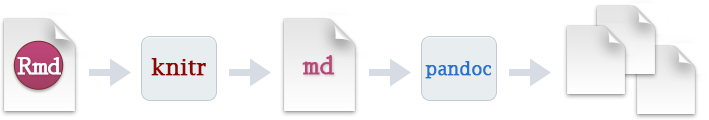

When you knit your document, R Markdown passes the .Rmd file to the R knitr package, which executes all code chunks and generates a new Markdown (.md) file. This Markdown file includes both the code and its output (see Figure 3.6).

The .md file created by knitr is then processed by the Pandoc program, which converts it into the final output format (e.g., HTML, PDF, or Word) as illustrated in Figure 3.6.

Figure 3.6: R Markdown flow.

3.2.4 Basic R Markdown Syntax

In this section, we will focus on learning the syntax and protocols needed to create:

- Headers

- Inside text commenting

- Lists

- Italicized, bold, and combined formatting

- Embedded code chunks and inline code

- Spell checking

To master these skills, edit your .Rmd file as we progress, and knit the document to view the final result.

Additional syntax and formatting options are available in the R Markdown Reference Guide, which you can access in RStudio as follows:

- Select:

Help -> Cheatsheets -> R Markdown Reference Guide

Note: The Cheatsheets section also provides access to other helpful resources related to R Markdown and data manipulation. These documents will be very useful throughout this course.

3.2.5 Before Starting

Before you start this tutorial, please delete the content of your R Markdown file starting on line 11 ## R Markdown to the end of the document (see Figure 3.7).

Figure 3.7: R Markdown of Chapter 2 - Part A.

3.2.6 Headers

Below is the syntax to create headers at three levels:

Syntax:

The "#" refers to the level of the header

# Header 1

## Header 2

### Header 3 💡 Info

- While Markdown headers will render correctly without blank lines, it is best practice to include blank lines both before and after headers. Doing so improves the readability of the raw Markdown and helps minimize knitting quirks. Overall, including a blank line before a header (except at the very start of a document) and after it is not strictly required, but it makes your Markdown cleaner and more consistent.

💡 Your turn to practice this syntax in your document by:

- Including 2 level 1 headers

- Embedding 2 level 2 headers under each level 1 header

- Checking your code by knitting the document

I let you pick great names for your headers!

3.2.7 Inside Text Commenting

Markdown does not have a built-in syntax for adding comments within text, but you can use HTML-style comments instead:

# HTML syntax to comment inside text

<!-- COMMENT -->This syntax is typically used to highlight areas of the text that need revision, clarification, or further work. Comments inserted this way will not be visible in the knitted document.

You can learn more about this HTML syntax on this webpage.

💡 Your turn to practice this syntax in your document by:

- Adding comments describing the content included under each level 1 header

- Checking your code by knitting the document

3.2.8 Lists

There are two types of lists:

- Unordered

- Ordered

3.2.8.1 Syntax for unordered lists

Syntax:

* unordered list

* item 2

+ sub-item 1

+ sub-item 2Note: For each sub-level include two tabs to create the hierarchy.

Output:

- unordered list

- item 2

- sub-item 1

- sub-item 2

💡 Your turn to practice this syntax in your document by:

- Including a 2-level unordered list under the first level 1 header

- Checking your code by knitting the document

3.2.8.2 Syntax for ordered lists

Syntax:

1. ordered list

2. item 2

+ sub-item 1

+ sub-item 2Output:

- ordered list

- item 2

- sub-item 1

- sub-item 2

💡 Your turn to practice this syntax in your document by:

- Including a 2-level ordered list under the second level 1 header

- Checking your code by knitting the document

3.2.9 Italicize and Bold Words

The following syntax renders text in italics, bold, or both italics and bold:

#Syntax for italics

*italics*

#Syntax for bold

**bold**

#Syntax for italic and bold

***italic and bold***Output:

- Italic

- Bold

- Italic and bold

💡 Your turn to practice this syntax in your document by:

- Writing a sentence in your R Markdown document that includes an italicized word

- Writing a sentence that includes a word or phrase in bold

- Writing a sentence where a word or phrase is both bold and italicized

- Checking your code by knitting the document

3.2.10 Create Hyperlinks

Adding hyperlinks to your documents support reproducible science and it can be easily done with the following syntax:

#Syntax to add hyperlink

[text](link)

#Example to provide hyperlink to RStudio

[RStudio](https://www.rstudio.com)When knitted, the example with RStudio turns like that RStudio.

💡 Your turn to practice this syntax in your document by:

- Creating a hyperlink to the Boise State website

- Creating a hyperlink to download R for Mac computers

- Checking your code by knitting the document

3.2.11 Include Code Chunks

One of the most exciting features of working with the R Markdown format is the ability to directly embed the output of R code into the compiled document (see Figure 3.6).

In other words, when you compile your .Rmd file, R Markdown will automatically execute each code chunk and inline code expression (see example below), and insert their results into the final document.

If the output is a table or a figure, you can assign a label to it (by adding metadata within the code chunk; see Part B) and refer to it later in your PDF or HTML document. This process—known as cross-referencing—is made possible through the \@ref() function, which is implemented in the R bookdown package.

3.2.11.1 Code Chunk

A code chunk can be easily inserted into your document using one of the following methods:

- Using the keyboard shortcut Ctrl + Alt + I (on macOS: Cmd + Option + I).

- Clicking the

Insertbutton in the editor toolbar.

in the editor toolbar. - Typing

{r} to open the chunk andto close it.

By default, the code chunk expects R code, but you can also insert chunks for other programming languages (e.g., Bash, Python).

💡 Your turn to practice this syntax in your document by:

- Creating a code chunk in your R Markdown document that prints the message “Hello world” when you knit the document

3.2.11.2 Chunk Options

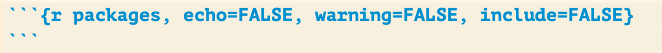

Chunk output can be customized with knitr options arguments set in the {} of a code chunk header. In the examples displayed in Figure 3.8 five arguments are used:

include = FALSEprevents code and results from appearing in the finished file. R Markdown still runs the code in the chunk, and the results can be used by other chunks.echo = FALSEprevents code, but not the results from appearing in the finished file. This is a useful way to embed figures.message = FALSEprevents messages that are generated by code from appearing in the finished file.warning = FALSEprevents warnings that are generated by code from appearing in the finished.fig.cap = "..."adds a caption to graphical results.

We will delve more into chunk options in part D of chapter 2, but in the meantime please see the R Markdown Reference Guide for more details.

Figure 3.8: Example of code chunks.

💡 Your turn to practice this syntax in your document by:

- Editing the code chunk you created earlier (that prints “Hello world”) so that the code is hidden when you knit the document, but the output is still displayed

3.2.12 Inline Code

Code results can also be inserted directly into the text of a .Rmd file by enclosing the code as follows:

# Syntax for inline code

``r 2+2`` # Please amend to include only 1 back tickOutput: 4

R Markdown will always:

- Display the results of inline code, but not the code.

- Apply relevant text formatting to the results.

As a result, inline output is indistinguishable from the surrounding text.

Warning: Inline expressions do not take knitr options and is therefore less versatile. We usually use inline code to perform simple stats (e.g. 4x4; 16)

💡 Your turn to practice this syntax in your document by:

- Editing a sentence in your R Markdown text to include the output of the line of code 2 + 2, so that the result is automatically displayed when the document is knitted

3.2.13 Check Spelling

There are three ways to access spell checking in an R Markdown document in RStudio:

- A spell check button

to the right of the save button.

to the right of the save button. Edit > Check Spelling...- The F7 key.

3.3 PART B: Setting Your R Markdown Document

3.3.1 Introduction

The aim of this tutorial is to develop an R Markdown document that can be used as a template to produce your reproducible reports.

We will focus on learning how to configure your document to manage software dependencies, set global parameters for knitting, and produce appendices with software citations, package version information, and details about the operating system used to generate the reproducible report.

3.3.2 Learning Outcomes

This tutorial provides students with the opportunity to learn how to:

- Study and understand the structure of an R Markdown document

- Reinforce your skills in creating R Markdown documents within dedicated project folders

- Define parameters in the YAML metadata section to control the formatting of the knitted document

- Install R dependencies and load packages needed to produce your document

- Generate a citation file for the R packages used in the document

- Generate an appendix with citations for all R packages

- Generate an appendix with R package versions used to produce the document

3.3.3 Structure of an R Markdown Document

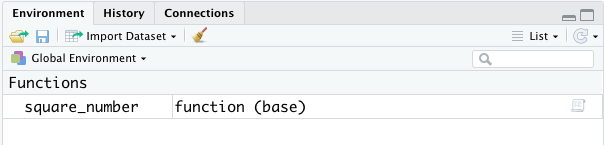

To facilitate the teaching of the learning outcomes, a roadmap of the R Markdown file (*.Rmd) structure is summarized in Figure 3.9.

To support the reproducibility of your research, we structure the R Markdown file as follows (Figure 3.9):

- The YAML metadata section (at the top of the document) must include information about your bibliography (

*.bib) and citation style language (*.csl) files, both of which must be stored in the working directory. - Two R code chunks are provided just below the YAML metadata section:

- Code chunk #1: Installs and loads packages, and generates a

packages.bibfile (stored in the working directory). - Code chunk #2: Sets global options for code chunks.

- Code chunk #1: Installs and loads packages, and generates a

- The Markdown section beneath the two R code chunks is edited to add headers for the References and Appendices sections.

- Finally, two R code chunks are included in the Appendices section:

- Code chunk #3: Provides citations of software (relying on the output of Code chunk #1) for Appendix 1.

- Code chunk #4: Provides software version information (relying on sessionInfo()) for Appendix 2.

Figure 3.9: Representation of the RMarkdown file structure taught in this class. The following colors represent the three main computing languages found in an RMarkdown document: Black: Markdown (we also sometimes use HTML), Green: YAML, and Blue: R (included in code chunks). See text for more details.

3.3.4 Supporting Files

Please refer to section for more details on supporting files and their locations on the shared Google Drive.

3.3.5 Create Your R Markdown Document

Use the material from the Chapter 2 – Part A and Before Starting sections to create and save your R Markdown document for this tutorial.

💡 Guidelines

- Document title: Chapter 2 - part B

- Document output format: HTML

- File name: Chapter2_partB.Rmd

-

File path:

Reproducible_Science/Chapters/Chapter_2

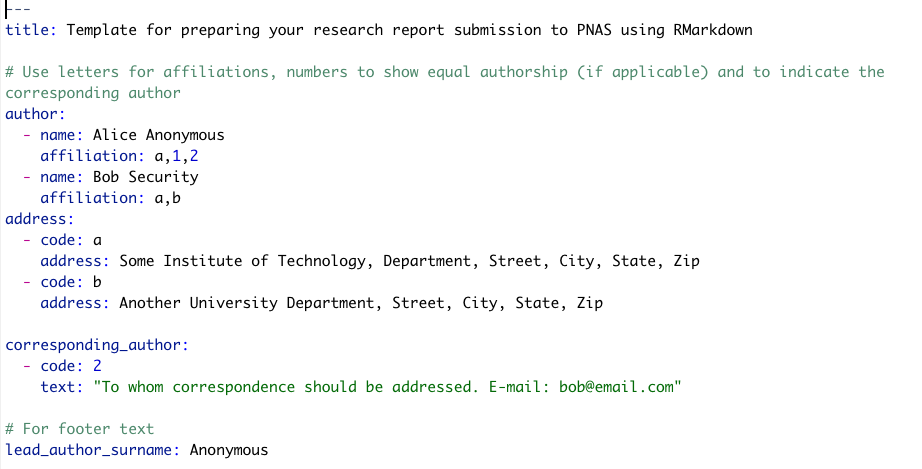

3.3.6 YAML Metadata Section

The YAML metadata section (Figure 3.9) allows users to provide arguments (referred to as fields) that control how an R Markdown document is converted into its final output format. In this class, we will use functions from the knitr (Xie, 2015, 2023b) and bookdown (Xie, 2016, 2023a) packages to populate this section. (Field names, as declared in the YAML metadata section, are provided in parentheses.)

- Title (

title) - Subtitle (

subtitle) - Author(s) (

author) - Date (

date) - Output format(s) (

output) - Citations link (

link-citations) - Font size (

fontsize) - Bibliography file(s) (

bibliography) - Citation style file for journal formatting (

csl)

The YAML code shown below generates either an HTML or PDF document (see the output field), includes a table of contents (see the toc field), and formats in-text citations and the bibliography section according to the journal style specified in the AmJBot.csl file (via the csl field). The bibliography must be stored in a .bib file (in this case, Bibliography_Reproducible_Science_2.bib) placed at the root of your working directory.

---

title: "Your title"

subtitle: "Your subtitle"

author: "Your name"

date: "`r Sys.Date()`"

output:

bookdown::html_document2:

toc: TRUE

bookdown::pdf_document2:

toc: TRUE

link-citations: yes

fontsize: 12pt

bibliography: Bibliography_Reproducible_Science_2.bib

csl: AmJBot.csl

---3.3.6.1 Step-by-Step Procedure

Follow these steps to set up your YAML metadata section (also see Figure 3.9):

- Make sure you have created a document following the approach described here

- Copy the following files, available on Google Drive, into your project folder:

Bibliography_Reproducible_Science_2.bib

AmJBot.csl

- Replace your YAML metadata section (at the top of your R Markdown document) with the code as shown in the section above

- Knit your document and inspect the output file. Read this section to get more details on the knitting procedure.

💡 Info

-

Warning: The

.biband.cslfiles must be stored in the same working directory as your.Rmdfile. -

Rfunctions in the YAML metadata section: This can be done using inline R code syntax. For example, useSys.Date()to automatically add the current date to the output document. -

Bibliography files: You can use multiple bibliography files by specifying them like this:

bibliography: [file1.bib, file2.bib]. -

Omitting parameters in the YAML metadata section: You can omit parameters by commenting them out with a

#at the beginning of the line (equivalent to commenting). - More information: To learn more about YAML and its use in R Markdown, visit this website.

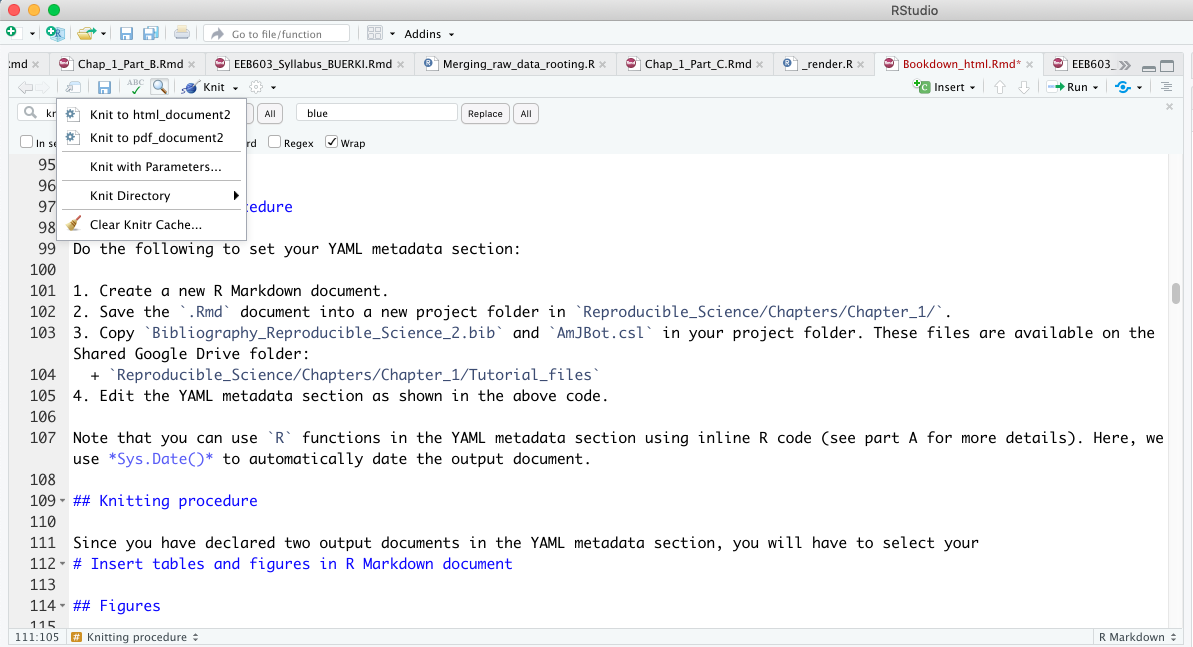

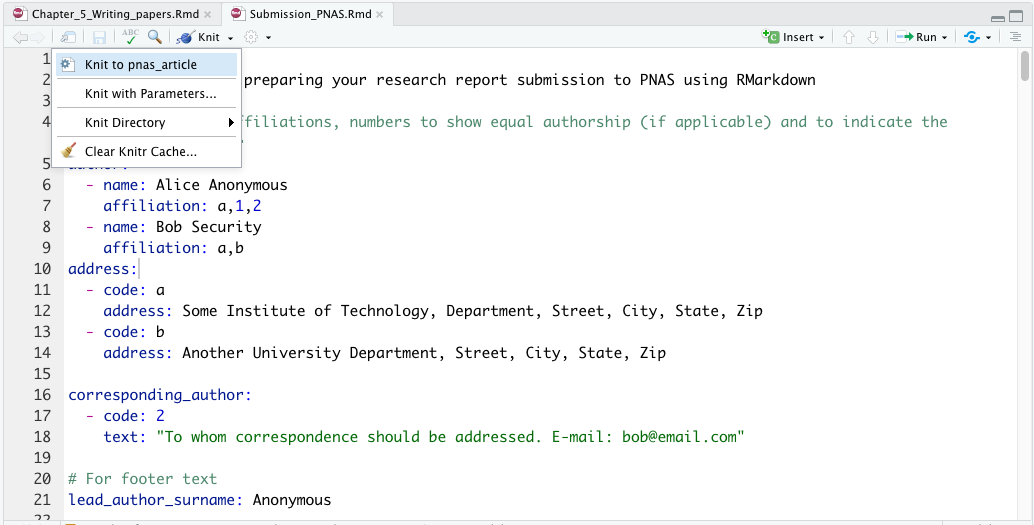

3.3.6.2 Knitting Procedure

Since you have declared two output formats in the YAML metadata section, and both are specific to bookdown functions, you need to select which format you want to use to compile your document. To do this, click the drop-down menu to the left of the Knit button (see Figure 3.10).

To ensure that bookdown functions are applied correctly, make sure to select one of the following options (see Figure 3.10):

Knit to html_document2Knit to pdf_document2

Figure 3.10: Snapshot of RStudio console showing the drop-down list associated to Knit button.

3.3.7 Install R Dependencies and Load Packages

3.3.7.1 Introduction

It is best practice to add an R code chunk directly below the YAML metadata section to automatically install and load the required R packages.

This approach offers two key benefits that support the reproducibility of your research:

- It ensures that all dependencies needed to produce your document are installed and available (see Figure 3.9).

- It allows you to automatically generate a citation file for all R packages used in your report (see below).

💡 Disclaimer

-

Warning: The code provided in this section will install the following

Rpackages on your computer: “knitr”, “rmarkdown”, “bookdown”, “formattable”, “kableExtra”, “dplyr”, “magrittr”, “prettydoc”, “htmltools”, “knitcitations”, “bibtex”, “devtools”. -

More on the

Rpackages: These packages are required to produce your reproducible reports in RStudio. All of them are available on CRAN, the official repository forR. -

Rpackage repositories: Make sure you have set yourRpackage repositories in RStudio before proceeding with this tutorial. You can do this by following this procedure. - Questions or concerns: If you have any questions, please contact the instructor at svenbuerki@boisestate.edu.

3.3.7.2 Step-by-Step Procedure

- Insert an R code chunk

directly below your YAML metadata section (see Figure 3.9).

directly below your YAML metadata section (see Figure 3.9). - Name the code chunk

packagesand set the chunk options as follows (see Figure 3.11):echo = FALSE

warning = FALSE

include = FALSE

Figure 3.11: This is how your packages R code chunk should look like at this stage of the procedure.

- Copy the following code into your R code chunk (Figure 3.11) to install and load the packages required to produce your report:

###~~~

# Load R packages

###~~~

#Create a vector w/ the required R packages

# --> If you have a new dependency, don't forget to add it in this vector

pkg <- c("knitr", "rmarkdown", "bookdown", "formattable", "kableExtra", "dplyr", "magrittr", "prettydoc", "htmltools", "knitcitations", "bibtex", "devtools")

##~~~

#2. Check if pkg are already installed on your computer

##~~~

print("Check if packages are installed")

#This line outputs a list of packages that are not installed

new.pkg <- pkg[!(pkg %in% installed.packages())]

##~~~

#3. Install missing packages

##~~~

# Use an if/else statement to check whether packages have to be installed

# WARNING: If your target R package is not deposited on CRAN then you need to adjust code/function

if(length(new.pkg) > 0){

print(paste("Install missing package(s):", new.pkg, sep=' '))

install.packages(new.pkg, dependencies = TRUE)

}else{

print("All packages are already installed!")

}

##~~~

#4. Load all required packages

##~~~

print("Load packages and return status")

#Here we use the sapply() function to require all the packages

# To know more about the function type ?sapply() in R console

sapply(pkg, require, character.only = TRUE)- Review the R code to ensure you understand what it does and why

- Knit your document and inspect the output file

3.3.8 Generate a Citation File of R Packages Used

3.3.8.1 Introduction

I do not know about you, but I often struggle to properly cite R packages in my publications. Fortunately, R provides a built-in function to help with this. If you want to retrieve the citation for an R package, you can use the base R function citation().

For example, the citation for the knitr package can be obtained as follows:

# Generate citation for knitr Type this code directly in

# the Console

citation("knitr")##

## To cite package 'knitr' in publications use:

##

## Xie Y (2023). _knitr: A General-Purpose Package for Dynamic Report

## Generation in R_. R package version 1.44, <https://yihui.org/knitr/>.

##

## Yihui Xie (2015) Dynamic Documents with R and knitr. 2nd edition.

## Chapman and Hall/CRC. ISBN 978-1498716963

##

## Yihui Xie (2014) knitr: A Comprehensive Tool for Reproducible

## Research in R. In Victoria Stodden, Friedrich Leisch and Roger D.

## Peng, editors, Implementing Reproducible Computational Research.

## Chapman and Hall/CRC. ISBN 978-1466561595

##

## To see these entries in BibTeX format, use 'print(<citation>,

## bibtex=TRUE)', 'toBibtex(.)', or set

## 'options(citation.bibtex.max=999)'.If you want to generate those citation entries in BibTeX format, you can pass the object returned by citation() to toBibtex() as follows:

# Generate citation for knitr in BibTex format Note that

# there is no citation identifiers. Those will be

# automatically generated in our next code.

utils::toBibtex(utils::citation("knitr"))## @Manual{,

## title = {knitr: A General-Purpose Package for Dynamic Report Generation in R},

## author = {Yihui Xie},

## year = {2023},

## note = {R package version 1.44},

## url = {https://yihui.org/knitr/},

## }

##

## @Book{,

## title = {Dynamic Documents with {R} and knitr},

## author = {Yihui Xie},

## publisher = {Chapman and Hall/CRC},

## address = {Boca Raton, Florida},

## year = {2015},

## edition = {2nd},

## note = {ISBN 978-1498716963},

## url = {https://yihui.org/knitr/},

## }

##

## @InCollection{,

## booktitle = {Implementing Reproducible Computational Research},

## editor = {Victoria Stodden and Friedrich Leisch and Roger D. Peng},

## title = {knitr: A Comprehensive Tool for Reproducible Research in {R}},

## author = {Yihui Xie},

## publisher = {Chapman and Hall/CRC},

## year = {2014},

## note = {ISBN 978-1466561595},

## }💡 Info

-

Note that both

citation()andtoBibtex()are functions in the utils R package. -

To make sure that you are calling the right R function, you can add the package

name in front of the function, followed by

::. In this example, the code is as follows:utils::toBibtex(utils::citation(“knitr”)).

3.3.8.2 Step-by-Step Procedure

Here, you will edit the packages code chunk to output a .bib file containing references for all R packages used to generate your document. This file will be included in the appendix 1 presented in the next section.

This can be done by adding the following code at the end of your packages R code chunk:

# Generate BibTex citation file for all loaded R packages

# used to produce report Notice the syntax used here to

# call the function

knitr::write_bib(.packages(), file = "packages.bib")The .packages() function returns invisibly the names of all packages loaded in the current R session (to see the output, use .packages(all.available = TRUE)). This ensures that all packages used in your code will have their citation entries written to the .bib file.

Finally, to be able to cite these references (see Citation identifier) in your text, you need to edit the YAML metadata section. See Appendix 1 for a full list of references associated with the R packages used to generate this report.

💡 Your turn to practice this syntax in your document by:

-

Adding the code to generate

packages.bibin your R code chunk entitledpackages

. -

Knitting your document and inspecting your project folder to observe the creation of a new file entitled

packages.bib.

3.3.9 Generate Appendix with Citations of Used R Packages

Although a References section will be provided at the end of your document to cite in-text references (see References and Figure 3.9), it is useful to create a customized appendix listing citations for all R packages used to conduct the research, as shown in Appendix 1.

Here, we will learn the procedure to assemble such an appendix.

3.3.9.1 Step-by-Step Procedure

- Add a level-1 header at the end of your document titled “References”.

- Include the appendices after the References section (see Figure 3.9) by following the steps below:

- Insert

<div id="refs"></div>as shown below. This ensures that the appendices (or any other material) appear after the References section.

(See this resource for more details.)

- Insert

# References

<div id="refs"></div>

# (APPENDIX) Appendices {-}

# Appendix 1

Citations of all R packages used to generate this report.- Insert an R code chunk

directly below

directly below # Appendix 1to read and print the citations saved inpackages.bib. Use the following code:

### Load R package

library("knitcitations")

### Process and print citations in packages.bib Clear all

### bibliography that could be in the cash

cleanbib()

# Set pandoc as the default output option for bib

options(citation_format = "pandoc")

# Read and print bib from file

read.bibtex(file = "packages.bib")- Edit your R code chunk options as follows to correctly print the references:

{r generateBibliography, results = "asis", echo = FALSE, warning = FALSE, message = FALSE} - Knit your document to check that it produces the correct output (see Knitting procedure). Refer to Appendix 1 to see an example of the expected result.

3.3.10 Generate Appendix with R Package Versions and Operating System

In addition to citing R packages, you may also want to provide detailed information about the R package versions and operating system used (see Figure 3.9).

In R, the simplest — yet highly useful and important — way to document your environment is to include the output of sessionInfo() (or devtools::session_info()). Among other details, this output shows all loaded packages and their versions from the R session used to run your analysis.

Providing this information allows others to reproduce your work more reliably, as they will know exactly which packages, versions, and operating system were used to execute the code.

For example, here is the output of sessionInfo() showing the R version and packages I used to create this document:

# Collect Information About the Current R Session

sessionInfo()## R version 4.2.0 (2022-04-22)

## Platform: x86_64-apple-darwin17.0 (64-bit)

## Running under: macOS Big Sur/Monterey 10.16

##

## Matrix products: default

## BLAS: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRblas.0.dylib

## LAPACK: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRlapack.dylib

##

## locale:

## [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] klippy_0.0.0.9500 rticles_0.27 DiagrammeR_1.0.11

## [4] DT_0.34.0 data.tree_1.2.0 kfigr_1.2.1

## [7] devtools_2.4.5 usethis_3.2.1 bibtex_0.5.1

## [10] knitcitations_1.0.12 htmltools_0.5.7 prettydoc_0.4.1

## [13] magrittr_2.0.3 dplyr_1.1.4 kableExtra_1.4.0

## [16] formattable_0.2.1 bookdown_0.36 rmarkdown_2.29

## [19] knitr_1.44

##

## loaded via a namespace (and not attached):

## [1] Rcpp_1.0.11 svglite_2.1.3 lubridate_1.9.3 visNetwork_2.1.4

## [5] assertthat_0.2.1 digest_0.6.33 mime_0.12 R6_2.6.1

## [9] plyr_1.8.9 backports_1.4.1 evaluate_1.0.5 highr_0.11

## [13] httr_1.4.7 pillar_1.11.1 rlang_1.1.2 rstudioapi_0.17.1

## [17] miniUI_0.1.2 jquerylib_0.1.4 urlchecker_1.0.1 RefManageR_1.4.0

## [21] stringr_1.5.2 htmlwidgets_1.6.4 shiny_1.7.5.1 compiler_4.2.0

## [25] httpuv_1.6.13 xfun_0.41 pkgconfig_2.0.3 systemfonts_1.0.5

## [29] pkgbuild_1.4.8 tidyselect_1.2.0 tibble_3.2.1 viridisLite_0.4.2

## [33] later_1.3.2 jsonlite_1.8.8 xtable_1.8-4 lifecycle_1.0.4

## [37] formatR_1.14 scales_1.4.0 cli_3.6.2 stringi_1.8.3

## [41] cachem_1.0.8 farver_2.1.1 fs_1.6.3 promises_1.2.1

## [45] remotes_2.5.0 xml2_1.3.6 bslib_0.5.1 ellipsis_0.3.2

## [49] generics_0.1.4 vctrs_0.6.5 RColorBrewer_1.1-3 tools_4.2.0

## [53] glue_1.6.2 purrr_1.0.2 crosstalk_1.2.2 pkgload_1.4.1

## [57] fastmap_1.1.1 yaml_2.3.8 timechange_0.2.0 sessioninfo_1.2.3

## [61] memoise_2.0.1 profvis_0.3.8 sass_0.4.83.3.10.1 Step-by-Step Procedure

I have also used the approach described above to add this information in Appendix 2. This can be done as follows:

- Edit your document to add the following text below Appendix 1.

# Appendix 2

Version information about R, the operating system (OS) and attached or R loaded packages. This appendix was generated using `sessionInfo()`.- Then, add an R code chunk

and set its options as follows to correctly print the content:

and set its options as follows to correctly print the content:

{r sessionInfo, eval = TRUE, echo = FALSE, warning = FALSE, message = FALSE} - Copy this code in your code chunk:

# Load and provide all packages and versions

sessionInfo()- Knit your document to check that it produces the correct output.

3.3.11 All Set — You’re Good to Go!

You have now set up your R Markdown environment and are ready to start populating it! This means you can begin inserting your text and additional code chunks directly below the packages code chunk.

The References section marks the end of the main body of your document. If you wish to add appendices, do so under Appendix 2. Note that appendices will be labeled differently from the main sections of the document.

💡 Info

- This R Markdown document will be used as a template for the rest of Chapter 2.

- This document also serves as a foundation for your Bioinformatics Tutorial.

3.4 PART C: Tables, Figures and References

3.4.1 Introduction

The aim of this tutorial is to provide students with the expertise to generate reproducible reports using bookdown (Xie, 2016, 2023a) and related R packages (see Appendix 1 for a full list). Unlike the functions implemented in the R rmarkdown package (Xie et al., 2018, which is better suited for generating PDF reproducible reports), bookdown allows the use of one unified set of functions to generate both HTML and PDF documents.

In addition, the same approach and functions are used to process tables and figures, as well as to cross-reference them in the main body of the text. This tutorial will also cover how to cite references in the text, automatically generate a references section, and format citations according to journal styles.

3.4.2 Learning Outcomes

This tutorial provides students with the opportunity to learn how to:

- Insert tables in an R Markdown document

- Insert figures in an R Markdown document

- Cross-reference tables and figures in the text

- Cite references in the text

- Generate a References section

- Format citations according to journal style

3.4.3 Create Your R Markdown

Follow the approach described in the box below to create your R Markdown file for Chapter 2 – Part C.

💡 Approach to Create Your R Markdown

-

Create document: Make a copy of

Chapter2_partB.Rmdin the same project folder and rename itChapter2_partC.Rmd - Download document: If you have any issues with Chapter 2 - part B, then download the instructor version on Google Drive

-

Document title: Open the file in RStudio and edit the title in the YAML section to

Chapter 2 – Part C - Test document: Knit your document to ensure it works properly

3.4.4 Insert Tables

This tutorial introduces key concepts related to table creation in R Markdown, specifically the following:

- Creating a table based on R code

- Assigning a table caption

- Providing a unique label to the R code chunk for cross-referencing in the text

- Displaying the table in the document

More details on this topic will be provided in Chapter 9.

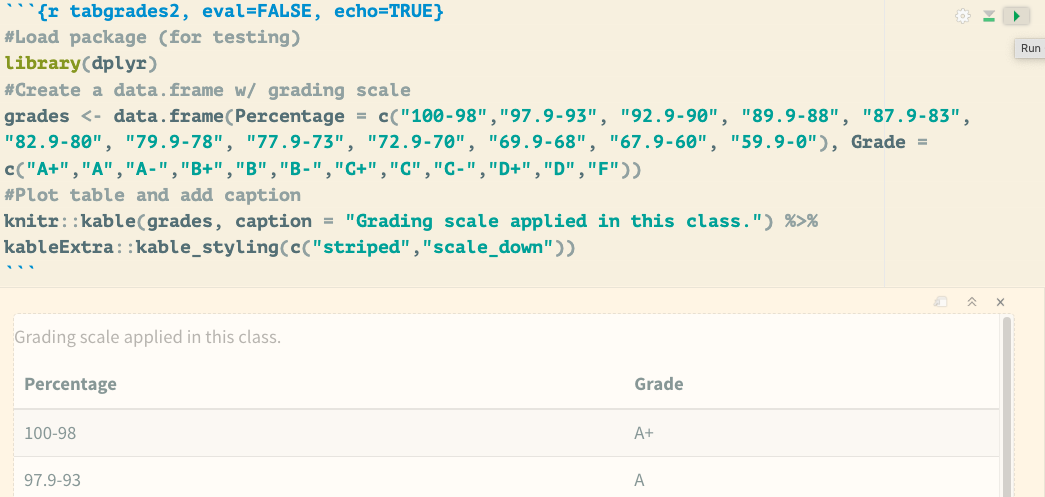

In this section, you will learn the R Markdown syntax and R code needed to replicate the grading scale presented in the syllabus (see Table 3.1).

| Percentage | Grade |

|---|---|

| 100-98 | A+ |

| 97.9-93 | A |

| 92.9-90 | A- |

| 89.9-88 | B+ |

| 87.9-83 | B |

| 82.9-80 | B- |

| 79.9-78 | C+ |

| 77.9-73 | C |

| 72.9-70 | C- |

| 69.9-68 | D+ |

| 67.9-60 | D |

| 59.9-0 | F |

3.4.4.1 Step-by-Step Protocol (10 minutes)

Follow these steps to reproduce Table 3.1 in your R Mardown document:

- Open

Chapter2_partC.Rmdin RStudio.

- Add a first-level header titled

Tablesbelow thepackagescode chunk.

- Insert an R code chunk under your header by clicking the

Insertbutton in the editor toolbar.

in the editor toolbar.

- Copy and paste the following R code into your code chunk:

### Load package (for testing)

library(dplyr)

### Create a data.frame w/ grading scale

grades <- data.frame(Percentage = c("100-98", "97.9-93", "92.9-90",

"89.9-88", "87.9-83", "82.9-80", "79.9-78", "77.9-73", "72.9-70",

"69.9-68", "67.9-60", "59.9-0"), Grade = c("A+", "A", "A-",

"B+", "B", "B-", "C+", "C", "C-", "D+", "D", "F"))

### Plot table and add caption

knitr::kable(grades, caption = "Grading scale applied in this class.",

format = "html") %>%

kableExtra::kable_styling(c("striped", "scale_down"))- Edit the R code chunk options line by adding the following argument (Note: each argument should be separated by a comma):

echo = FALSE

- Add the unique label

tabgradesin the chunk options line (immediately after{r) to enable cross-referencing.

- Test your R code to ensure it produces the expected table by clicking the

Playbutton at the top left of the code chunk (see Fig. 3.12).

Figure 3.12: Demonstrates how to test your code within the R Markdown document. Click the play icon in the top-right corner of the code chunk to execute the code and return its output directly in the R Markdown document without knitting.

- Knit your document using the

Knitbutton in the editor toolbar (see Figure 3.10) to inspect the output and check your syntax and code.

in the editor toolbar (see Figure 3.10) to inspect the output and check your syntax and code.

3.4.4.1.1 Challenge (10 minutes)

💡 Is something missing?

- Challenge: Scrutinize the code provided in this section to determine how the table caption is created.

- Test Your Hypothesis: Once you have figured out how the caption is assigned, test your hypothesis by editing it and knitting your document.

3.4.4.2 Double “Table” in Caption (10 minutes)

Several students have encountered an issue with duplicate table labels in the caption. The instructor did a web search and found a possible solution to help debug the issue:

- Problem: When using

kableExtra::kbl()orknitr::kable()in combination withkableExtrafunctions, you might see a caption like “Table Table X: Your Caption”. - Solution: Specify the

formatargument inknitr::kable()(e.g.,format = "html"), or usekableExtra::kbl()directly, which automatically handles the format. This preventskableExtrafrom re-interpreting a markdown table as a new table and adding an extra “Table” prefix.

In our case, we could try editing the code as follows (two options):

### Load package (for testing)

library(dplyr)

### Create a data.frame w/ grading scale

grades <- data.frame(Percentage = c("100-98", "97.9-93", "92.9-90",

"89.9-88", "87.9-83", "82.9-80", "79.9-78", "77.9-73", "72.9-70",

"69.9-68", "67.9-60", "59.9-0"), Grade = c("A+", "A", "A-",

"B+", "B", "B-", "C+", "C", "C-", "D+", "D", "F"))

### Plot table and add caption

# Option 1

knitr::kable(grades, caption = "Grading scale applied in this class.",

format = "html") %>%

kableExtra::kable_styling(c("striped", "scale_down"))

# Option 2

kableExtra::kbl(grades, caption = "Grading scale applied in this class.") %>%

kableExtra::kable_styling(c("striped", "scale_down"))The instructor encourages students to test the options proposed above in their own documents and determine whether they resolve the issue.

3.4.5 Insert Figures

This tutorial introduces key concepts related to figure creation in R Markdown, specifically the following:

- Creating a figure based on R code.

- Assigning a figure caption.

- Providing a unique label to the R code chunk allowing further cross-referencing in the text.

- Displaying the figure in the document.

More details on this topic will be provided in Chapter 10.

In this section, you will learn the R Markdown syntax and R code needed to replicate Figure 3.13.

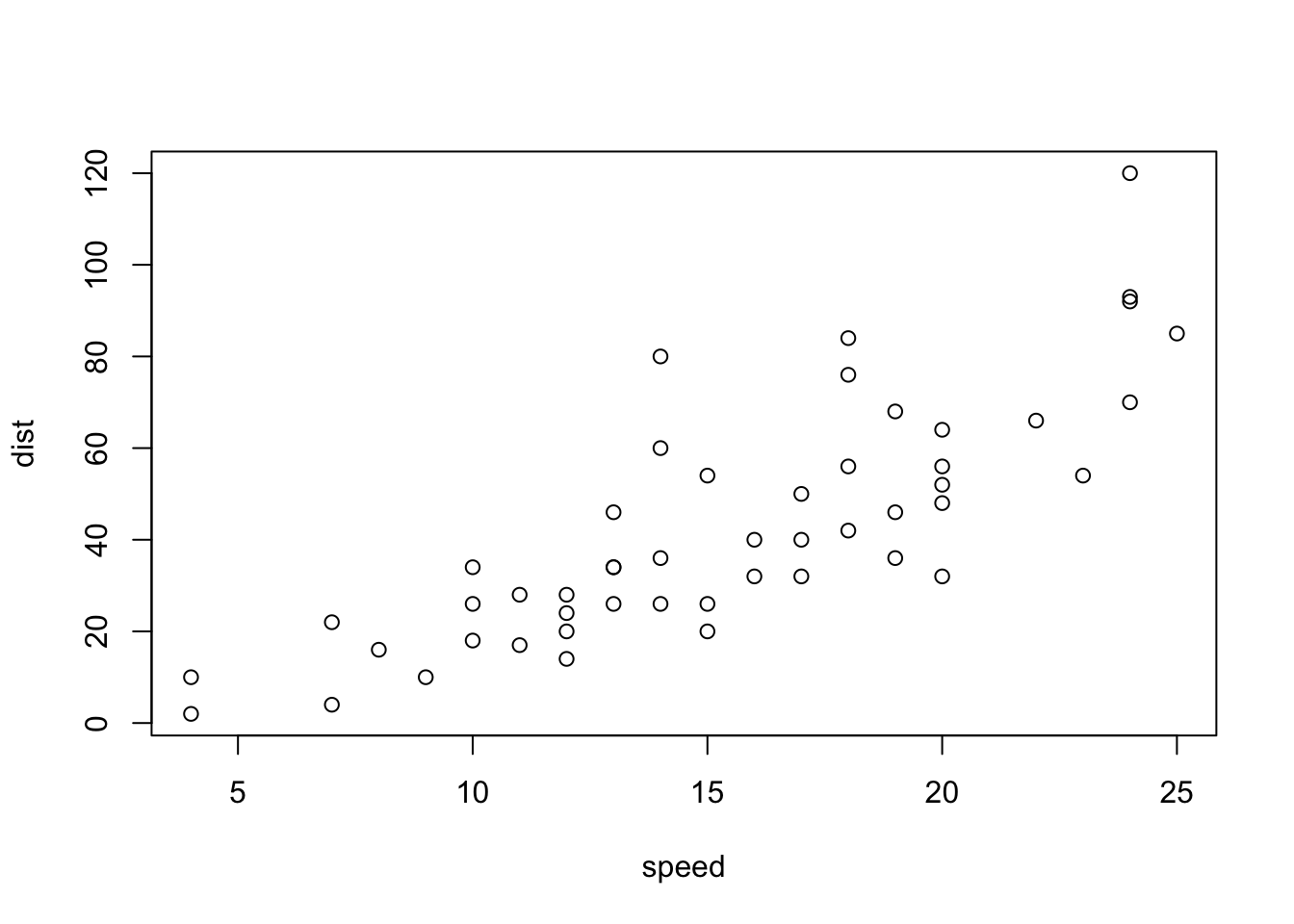

Figure 3.13: Plot of cars’ speed in relation to distance.

3.4.5.1 Step-by-Step Protocol (10 minutes)

Follow these steps to reproduce Figure 3.13 in your R Markdown document:

- If not done yet, open

Chapter2_partC.Rmdin RStudio. - Add a first-level header titled

Figuresbelow theTablesheader.

- Insert an R code chunk under your header by clicking the

Insertbutton in the editor toolbar.

in the editor toolbar. - Copy and paste the following R code into your code chunk:

### Load and summarize the cars dataset

summary(cars)

### Plot data

plot(cars)- Edit the R code chunk options line by adding the following argument (Note: each argument should be separated by a comma):

echo = FALSEresults = "hide"fig.cap = "Plot of cars' speed in relation to distance."out.width = "100%"

- Add the unique label

carsin the chunk options line (immediately after{r) to enable cross-referencing. - Test your R code to ensure it produces the expected table by clicking the

Playbutton at the top left of the code chunk (as done in Tables). - Knit your document using the

Knitbutton in the editor toolbar (see Figure 3.10) to inspect the output and check your syntax and code.

in the editor toolbar (see Figure 3.10) to inspect the output and check your syntax and code.

3.4.5.1.1 Challenge (10 minutes)

💡 Challenge

- What syntax is used to assign a figure caption?

- How does the syntax for assigning captions to tables and figures compare?

3.4.6 Cross-reference Tables and Figures in the Text

Cross-referencing tables and figures in the main body of your R Markdown document can easily be done by using the \@ref() function implemented in the bookdown package (see Figure 3.9).

3.4.6.1 General Syntax

The general syntax is as follows:

# Cross-referencing tables in main body of text

Table \@ref(tab:code_chunk_ID)

# Cross-referencing figures in main body of text

Figure \@ref(fig:code_chunk_ID)💡 More Info on the Syntax

-

It is worth mentioning that you need to manually add

TableorFigurein front of@ref(tab:code_chunk_ID)or@ref(fig:code_chunk_ID). This design allows the user to choose how tables and figures are referenced in the text. Journals often have distinct formatting styles, which must be followed during submission.

3.4.6.2 Step-by-Step Procedure (10 minutes)

- To cross-reference your table (labeled

tabgrades) in the text, follow these steps:- Type the following sentence below your

Tablesheader:

The grading scale presented in the syllabus is available in Table \@ref(tab:tabgrades). - Knit your document using the

Knitbutton in the editor toolbar (see Figure 3.10) to inspect the output and verify that the syntax and code are working correctly.

in the editor toolbar (see Figure 3.10) to inspect the output and verify that the syntax and code are working correctly.

- Type the following sentence below your

- To cross-reference your figure (labeled

cars) in the text, follow these steps:- Type the following sentence below your

Figuresheader:

The plot of the cars data is available in Figure \@ref(fig:cars). - Knit your document using the

Knitbutton in the editor toolbar (see Figure 3.10) to inspect the output and verify that the syntax and code are working correctly.

in the editor toolbar (see Figure 3.10) to inspect the output and verify that the syntax and code are working correctly.

- Type the following sentence below your

3.4.7 Cite References in the Text

3.4.7.1 Introduction

In this section, we will cover the following topics before delving into the practical implementation of citing references in your R Markdown document:

3.4.7.1.1 The Bibliography File

Pandoc can automatically generate citations in your text and a References section following a specific journal style (see Figure 3.9). To use this feature, you need to declare a bibliography file in the YAML metadata section under the bibliography: field.

In this course, we are working with bibliography files formatted using the BibTeX format. Other formats can also be used — please see this resource for more details.

Most journals allow citations to be downloaded in BibTeX format, but if this feature is not available, you can convert citation formats using online services (e.g., EndNote to BibTeX: https://www.bruot.org/ris2bib/).

3.4.7.1.2 The BibTeX Format

BibTeX is a reference management tool commonly used in conjunction with LaTeX (a Markup language) to format lists of references in scientific documents. It allows users to maintain a bibliographic database (.bib file) and cite references in a consistent and automated way.

Each entry in a .bib file follows this general structure:

Please find below an example of a reference formatted in BibTeX format:

@entrytype{citationIndentifier,

field1 = {value1},

field2 = {value2},

...

}💡 Info on BibTeX format

-

@entrytype: Type of reference (e.g.,@article, @book, @inproceedings). - citationIdentifier: Unique ID used to cite the entry in your R Markdown document.

- fields: Key-value pairs with bibliographic information (e.g., author, title, year).

Here is an example associated with Baker (2016):

# Example of BibTex format for Baker (2016) published in Nature

@Article{Baker_2016,

doi = {10.1038/533452a},

url = {https://doi.org/10.1038/533452a},

year = {2016},

month = {may},

publisher = {Springer Nature},

volume = {533},

number = {7604},

pages = {452--454},

author = {Monya Baker},

title = {1,500 scientists lift the lid on reproducibility},

journal = {Nature},

}3.4.7.1.3 Citation Identifier

The unique citation identifier of a reference (Baker_2016 in the example above) is set by the user in the BibTeX citation file (see the first lines in the examples provided above). This identifier is used to refer to the publication in the R Markdown document and also enables citing references and generating the References section.

3.4.7.2 Step-by-Step Procedures

In this section, we will cover the following topics to implement the tools for references citing in your R Markdown document:

3.4.7.2.1 Steps to Complete Before Citing References in Your Text

- Save all your

BibTeX-formatted references in a bibliography text file, and make sure to add the.bibor.bibtexextension.

- Place the bibliography file in your project folder (alongside your

.Rmdfile).

- Declare the name of your bibliography file in the YAML metadata section under the

bibliography:field.

- References formatted in the

BibTeXformat are available in the following file:Bibliography_Reproducible_Science_2.bib

3.4.7.2.1.1 Challenge (5 minutes)

💡 Download a Citation in BibTeX Format

- Visit this webpage.

-

Locate the

Citeicon and download a citation in.bibtexformat. -

Open the

.bibtexfile in a text editor and inspect its contents. - What is the unique citation identifier of this article?

3.4.7.2.2 Cite References in Your Text

We will examine the syntax for citing references, either as parenthetical citations or in-text citations.

- Parenthetical Citation: The entire citation appears in parentheses, usually at the end of a sentence or clause.

- In-Text Citation: The author’s name is part of the sentence, and the citation appears naturally in the flow of the text.

3.4.7.2.2.1 Parenthetical Citation

In this case, citations are placed inside square brackets ([]) (usually at the end of a sentence or clause) and separated by semicolons. Each citation must include a key composed of @ followed by the citation identifier (as stored in the BibTeX file).

Please find below some examples on citation syntax:

#Syntax

Blah blah [see @Baker_2016, pp. 33-35; also @Smith2016, ch. 1].

Blah blah [@Baker_2016; @Smith2016].Once knitted (using the  button), the citation syntax is rendered as:

button), the citation syntax is rendered as:

Blah blah (see Baker, 2016 pp. 33–35; also Smith et al., 2016, ch. 1).

Blah blah (Baker, 2016; Smith et al., 2016).

A minus sign (-) before the @ symbol will suppress the author’s name in the citation. This is useful when the author is already mentioned in the text:

#Syntax

Baker says blah blah [-@Baker_2016].Once knitted, the citation is rendered as:

Baker says blah blah (2016).

3.4.7.2.2.2 In-Text Citation

In this case, in-text citations can be rendered with the following syntax:

#Syntax

@Baker_2016 says blah.

@Baker_2016 [p. 1] says blah.Once knitted, the citation is rendered as:

Baker (2016) says blah.

Baker (2016 p. 1) says blah.

3.4.7.2.2.3 Challenge (10 minutes)

💡 Your turn to practice citing references in your document by:

- Write a sentence citing @Baker_2016 using parenthetical citation syntax.

- Write a sentence citing @Baker_2016 using in-text citation syntax.

3.4.8 Generate a References Section

Upon knitting, a References section will automatically be generated and inserted at the end of your document (see Figure 3.9).

We recommend adding a level-1 “References” header immediately after the final paragraph of the document, as shown below:

last paragraph...

# ReferencesThe bibliography will be inserted after this header (please see the References section of this tutorial for more details).

3.4.8.1 Challenge (10 minutes)

💡 Your Turn!

- Edit your document to add a References section.

3.4.9 Format Citations to Journal Style

In this section, we will explore how your bibliography can be automatically formatted to match a specific journal style. This is done by specifying a Citation Style Language (CSL) file in the YAML metadata section using the csl: field. The CSL file contains the formatting rules required to style both in-text citations and the bibliography according to the selected journal or publication.

3.4.9.1 What is the Citation Style Language?

The Citation Style Language (CSL) is an open-source project designed to simplify scholarly publishing by automating the formatting of citations and bibliographies. The project maintains a crowdsourced repository of over 8,000 free CSL citation styles. For more information, visit: https://citationstyles.org

3.4.9.2 CSL repositories

There are two main CSL repositories:

- GitHub Repository: https://github.com/citation-style-language/styles

- Zotero Style Repository: https://www.zotero.org/styles

3.4.9.3 Step-by-Step Procedure (10 minutes)

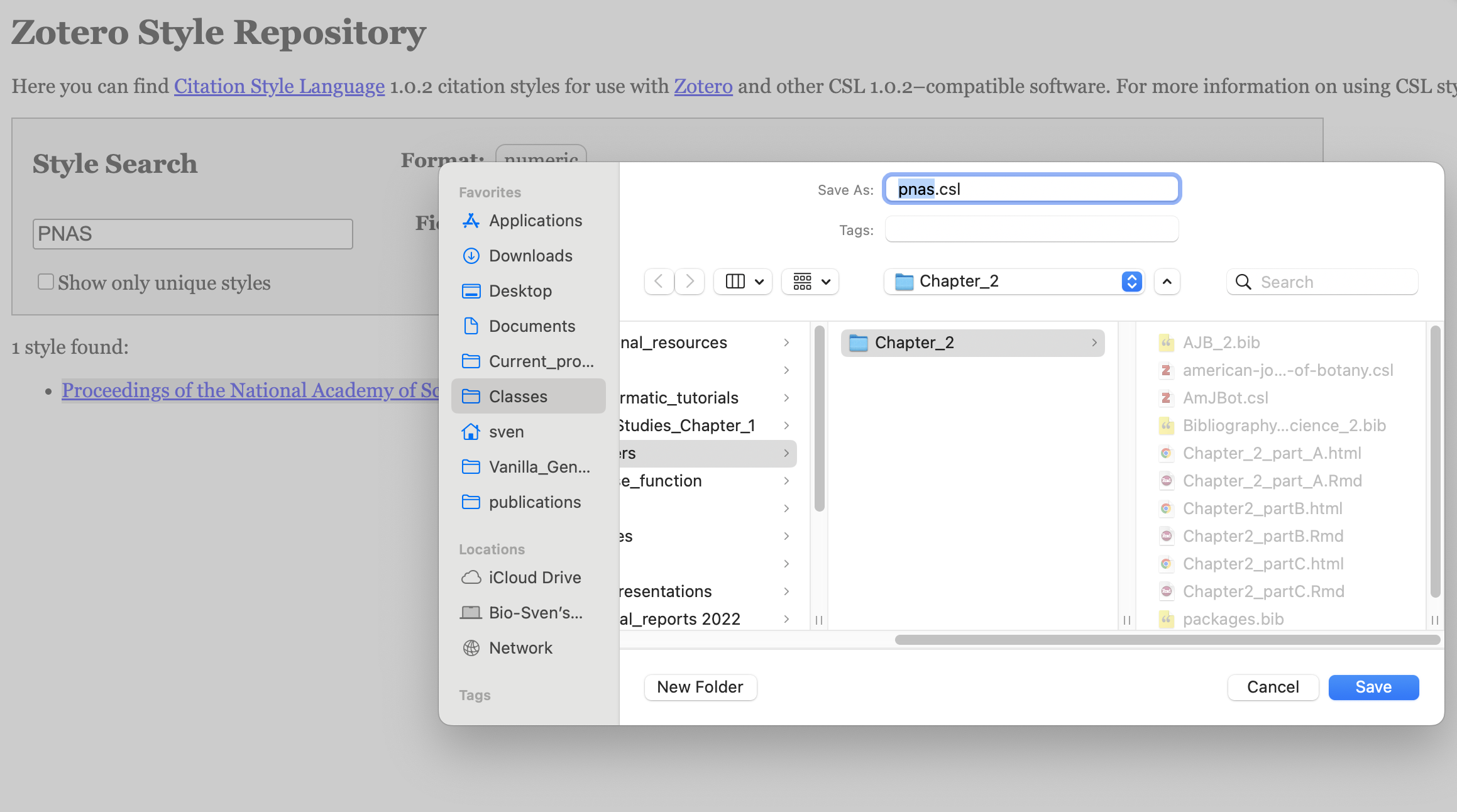

To learn this procedure and syntax, you will be tasked with downloading a .csl file and using it in your document (in place of AmJBot.csl).

Follow these steps to format your citations and bibliography according to a specific citation style (see Figure 3.9 for more details):

- Download a

.cslfile from the Zotero Style Repository.- To do this, type the name of your target journal in the search bar. Then, right-click on the corresponding style and select “Save Link As…” to download the

.cslfile. Save it in your project folder (see Figure 3.14).

Figure 3.14: Procedure to save csl file for PNAS on the Zotero Style Repository.

- To do this, type the name of your target journal in the search bar. Then, right-click on the corresponding style and select “Save Link As…” to download the

- Make sure your

.cslfile is in your project folder (alongside your.Rmdfile).

- Edit your YAML metadata section to specify the name of your

.cslfile. - Knit your document using the

Knit button. The Pandoc program will use the information specified in the YAML metadata section to format both the in-text citations and the bibliography section according to the citation style defined in the CSL file. Be sure to add a

button. The Pandoc program will use the information specified in the YAML metadata section to format both the in-text citations and the bibliography section according to the citation style defined in the CSL file. Be sure to add a Referencesheader at the end of your.Rmddocument.

3.5 PART D: Advanced R and R Markdown settings

3.5.1 Introduction

The aim of this tutorial is to provide an overview of procedures that can be applied to streamline your R Markdown document, supporting both your computing needs and formatting style.

3.5.2 Learning Outcomes

This tutorial provides students with the opportunity to learn how to:

- Set up your working directory

- Understand how to set global options for code chunks related to:

- Implement global options for code chunks

- Practice the global options procedure

3.5.3 Supporting Files

Please refer to section for more details on supporting files and their locations on the shared Google Drive.

3.5.4 Set Up Your Working Directory

Unlike R scripts, where you must set your working directory or provide the path to your files, the approach implemented in an R Markdown document (.Rmd) automatically sets the working directory to the location of the .Rmd file. This behavior is managed by functions from the knitr package.

💡 More Info

-

knitrexpects all referenced files to be located either in the same directory as the.Rmdfile or in a subfolder within that directory. - This setup is designed to enhance the portability of your R Markdown project, which typically consists of multiple related files.

3.5.4.1 Step-by-Step Procedure

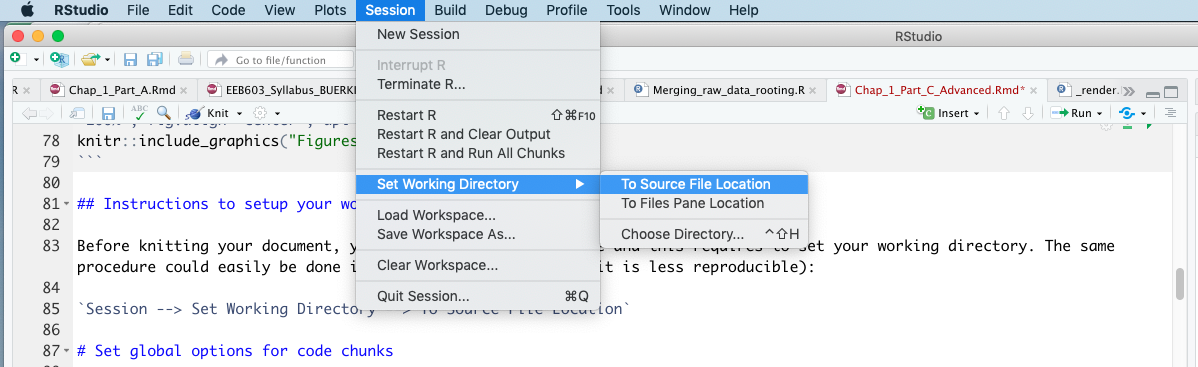

Before knitting your document, you will be testing your code (see Figure 3.12). To ensure smooth code testing, you need to set your working directory. This can be done in RStudio by clicking (see Figure 3.15):

Session --> Set Working Directory --> To Source File Location

Figure 3.15: Snapshot of RStudio showing procedure to set your working directory to allow testing your code prior to knitting.

3.5.5 Set Global Options for Code Chunks

3.5.5.1 Introduction

To improve code reproducibility and efficiency, and to comply with publication requirements, it is customary to include a code chunk at the beginning of your .Rmd file that sets global options for the entire document (see Figure 3.9). These settings relate to the following elements of your code:

These general settings will be configured using the opts_chunk$set() function provided by the knitr package (Xie, 2023b). The following website contains valuable information on code chunk options:

3.5.5.1.1 The opts_chunk$set() Function

The knitr function opts_chunk$set() is used to modify the default global options in an .Rmd document.

Before starting, please note the following important points about the options:

- Chunk options must be written on a single line; no line breaks are allowed within chunk options.

- Avoid using spaces and periods (

.) in chunk labels and directory names. - All option values must be valid R expressions, just as function arguments are written.

We will discuss each part of the settings individually; however, these settings must be combined into a single code chunk in your document named setup (please see below for more details).

3.5.5.2 Text Output

This section covers settings related to the text output generated by code chunks.

Below is an example of options that can be applied across code chunks:

# Setup options for text results

opts_chunk$set(echo = TRUE, warning = TRUE, message = TRUE, include = TRUE)3.5.5.2.1 Explanations of the Code

echo = TRUE: Include all R source code in the output file.warning = TRUE: Preserve warnings (produced bywarning()) in the output, as if running R code in a terminal.message = TRUE: Preserve messages emitted bymessage()(similar to warnings).include = TRUE: Include all chunk outputs in the final output document.

If you want some text results to use different options, please adjust those in their specific code chunks. This comment applies to all other general settings as well.

3.5.5.3 Code Formatting

This section covers settings related to code formatting (that is, how code is displayed in the final html or pdf document) generated by code chunks.

Below is an example of options that can be applied across code chunks:

# Setup options for code formatting

opts_chunk$set(tidy = TRUE, tidy.opts = list(blank = FALSE, width.cutoff = 60),

highlight = TRUE)3.5.5.3.1 Explanations of the Code

tidy = TRUE: UseformatR::tidy_source()to reformat the code. Please see thetidy.optsoption below.tidy.opts = list(blank = FALSE, width.cutoff = 60): A list of options passed to the function specified by thetidyoption. Here, the code is formatted to avoid blank lines and has a width cutoff of 60 characters.highlight = TRUE: Highlight the source code.

3.5.5.4 Code Caching

To compile your .Rmd document faster—especially when you have computationally intensive tasks—you can cache the output of your code chunks. This process saves the results of these chunks, allowing you to reuse the output later without re-running the code.

The knitr package provides options to evaluate cached chunks only when necessary, but this must be set by the user. This procedure creates a unique MD5 digest (a data storage technique) for each chunk to track changes. When the option cache = TRUE (there are other, more granular settings; see below) is set, the chunk will only be re-evaluated in the following scenarios:

- There are no cached results (either this is the first time running or the cached results were moved or deleted).

- The code chunk has been modified.

The following code allows you to implement this caching procedure in your document:

# Setup options for code caching

opts_chunk$set(cache = 2, cache.path = "cache/")3.5.5.4.1 Explanations of the Code

- In addition to

TRUEandFALSE, thecacheoption also accepts numeric values (cache = 0, 1, 2, 3) for more granular control.0is equivalent toFALSE, and3is equivalent toTRUE.- With

cache = 1, the results are loaded from the cache, so the code is not re-evaluated; however, everything else is still executed, including output hooks and saving recorded plots to files.

- With

cache = 2(used here), the behavior is similar to1, but recorded plots will not be re-saved to files if the plot files already exist—saving time when dealing with large plots.

- With

cache.path = "cache/": Specifies the directory where cache files will be saved. You do not need to create the directory manually; knitr will create it automatically if it does not already exist.

3.5.5.5 Plot Output

Plots are a major component of your research and form the basis of your figures. You can take advantage of options provided by the knitr package to produce plots that meet publication requirements. This approach can save valuable time during the writing phase, as it eliminates the need to manually adjust figure size and resolution to comply with journal guidelines.

Below is an example of options that can be applied across code chunks:

# Setup options for plots The first dev is the master for

# the output document

opts_chunk$set(fig.path = "Figures_MS/", dev = c("png", "pdf"),

dpi = 300)3.5.5.5.1 Explanations of the Code

fig.path = "Figures_MS/": Specifies the directory where figures generated by the R Markdown document will be saved. As with caching, this folder does not need to exist beforehand; it will be created automatically. Files will be saved based on the code chunk label and assigned figure number.dev = c("pdf", "png"): Saves each figure in bothpdfandpngformats.dpi = 300: Sets the resolution (dots per inch) for bitmap devices. The resulting image dimensions follow the formula: DPI × inches = pixels. Check the submission guidelines of your target publication to ensure this value meets their requirements.

💡 More Than One Type of Figure

-

It is worth noting that you might use external figures in your

.Rmddocument. To avoid confusion between figures generated by the.Rmdfile and those imported from outside sources, it is best practice to save them in two separate subfolders for more details).

3.5.5.5.2 Additional Plot Options

Some journals have specific requirements for figure dimensions. You can easily set these using the following option:

fig.dim: (NULL; numeric) — If a numeric vector of length 2, it specifiesfig.widthandfig.height. For example:fig.dim = c(5, 7).

The unit for both dimensions is inches.

3.5.5.6 Figure Positioning

Positioning figures close to their corresponding code chunks is important for clarity and reproducibility. This can be controlled by adding another opts_chunk$set() option in your setup code chunk.

Use the fig.pos argument and set it to "H" to enforce figure placement near the relevant code.

## Locate figures as close as possible to requested

## position (=code)

opts_chunk$set(fig.pos = "H")💡 Warning

-

This setting may cause errors when the

.Rmdfile is knitted to apdfdocument. If this occurs, comment out the line of code using#and try knitting again.

3.5.6 Implement Global Options for Code Chunks

In this section, we will combine all the global settings discussed above into a code chunk named setup, which should be placed below the YAML metadata section (see Figure 3.9 for more details on its location).

In addition to containing the global settings, it is advisable to include a code section for loading the required R packages (see Chapter 2 - Part B and Figure 3.9).

Below is the code for the setup code chunk based on the options presented above:

### Load packages Add any packages specific to your code

library("knitr")

library("bookdown")

### Chunk options: see http://yihui.name/knitr/options/ ###

### Text output

opts_chunk$set(echo = TRUE, warning = TRUE, message = TRUE, include = TRUE)

## Code formatting

opts_chunk$set(tidy = TRUE, tidy.opts = list(blank = FALSE, width.cutoff = 60),

highlight = TRUE)

## Code caching

opts_chunk$set(cache = 2, cache.path = "cache/")

## Plot output The first dev is the master for the output

## document

opts_chunk$set(fig.path = "Figures_MS/", dev = c("png", "pdf"),

dpi = 300)

## Figure positioning

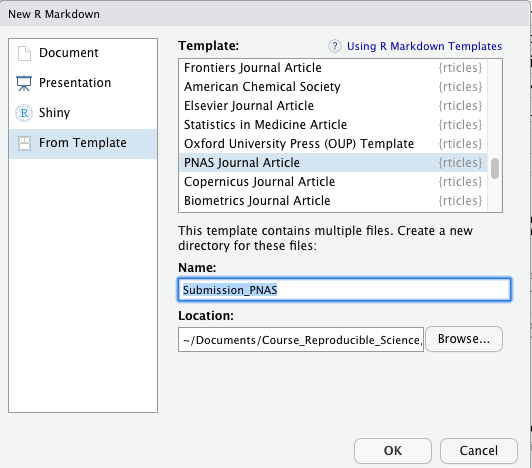

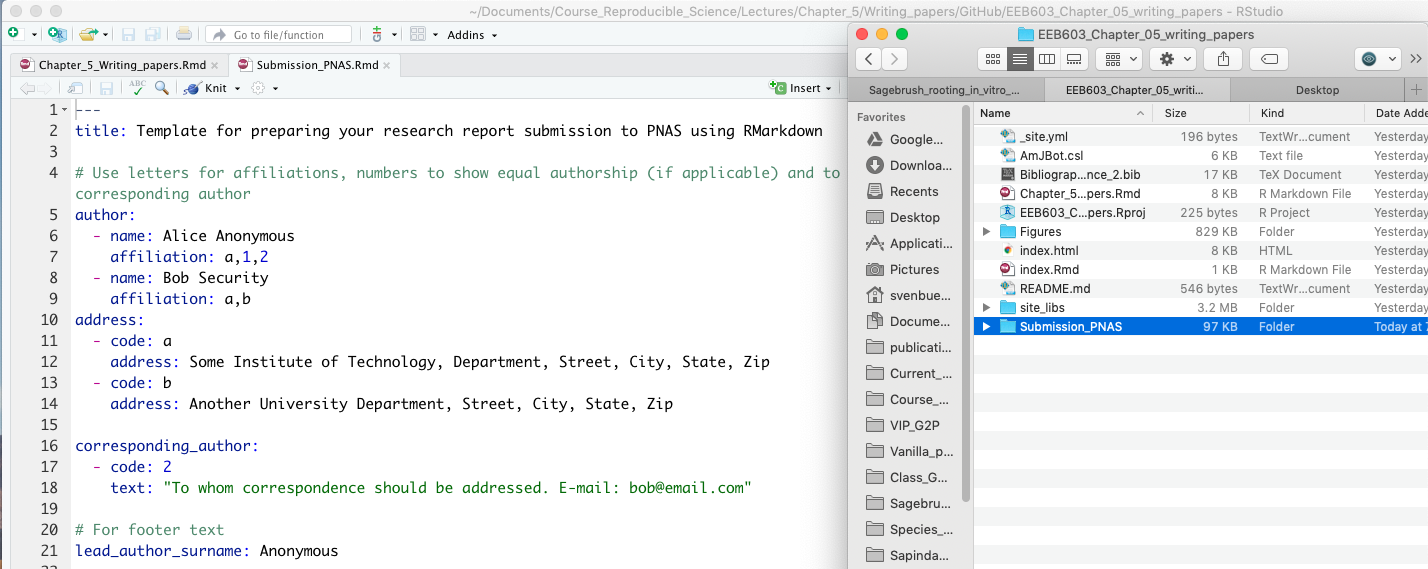

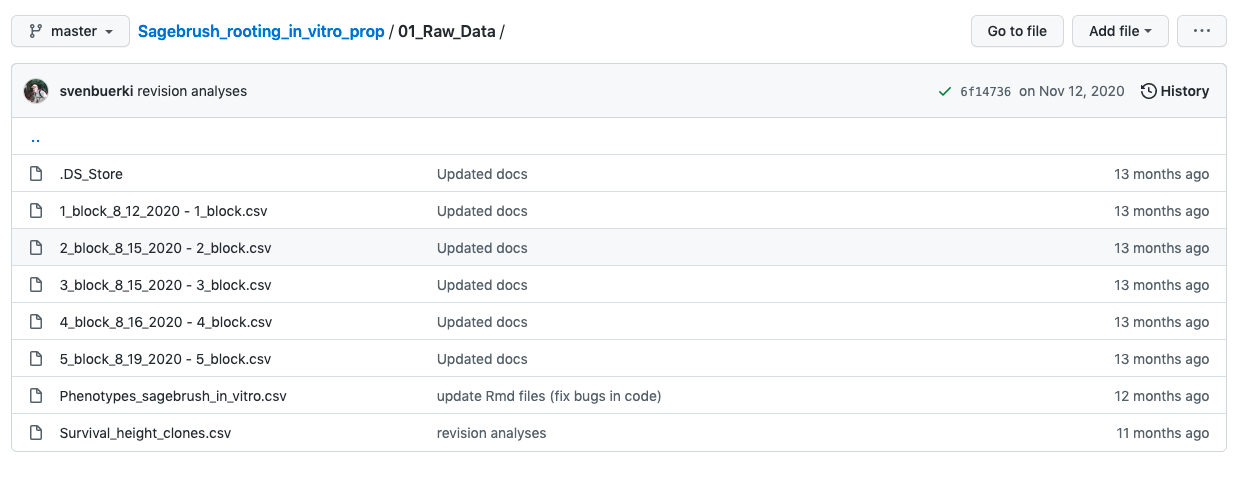

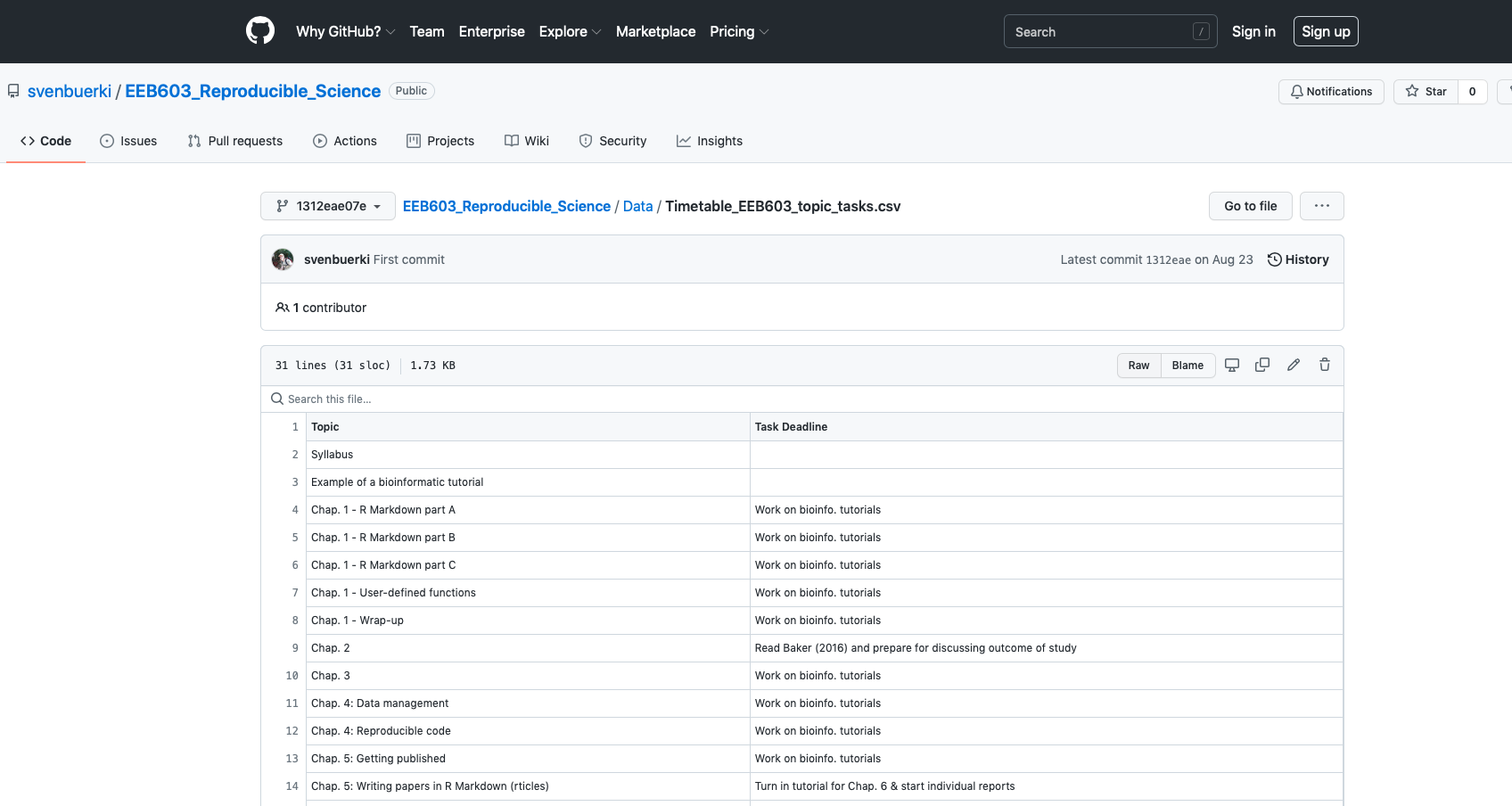

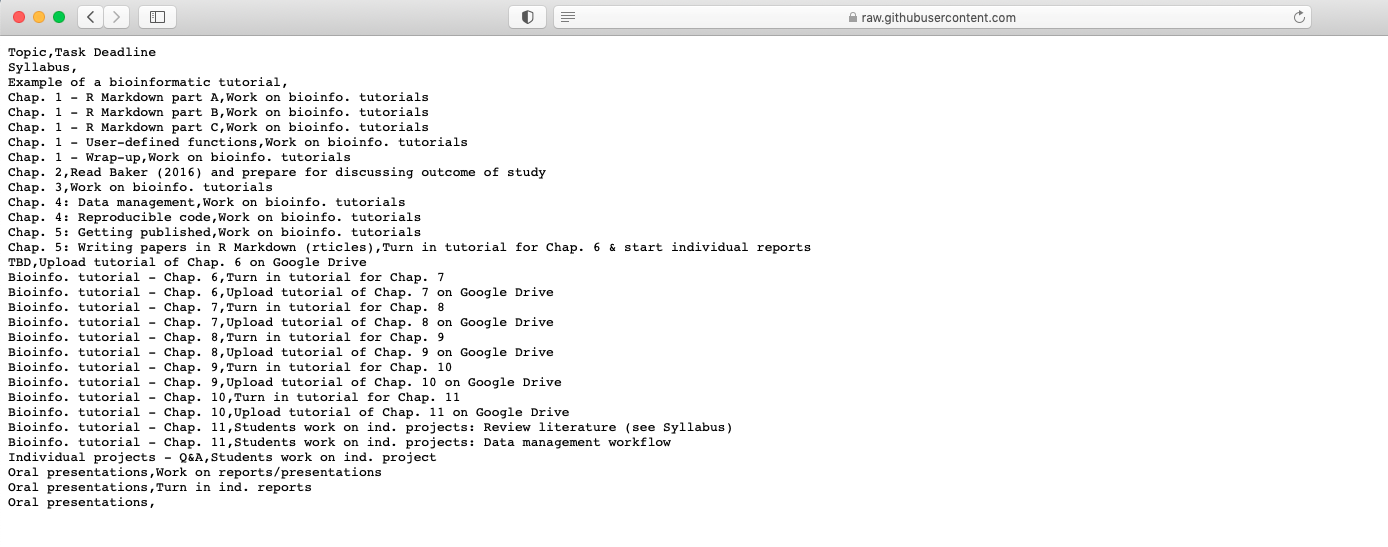

opts_chunk$set(fig.pos = "H")3.5.6.1 Settings of the setup R Code Chunk